Week 4

With the midpoint review approaching, we wanted to move on to ideation and brainstorming. Before that, we carried out an overall systematic analysis.

Topic guide:

- This method helped us break down the brief into separate topics for research.

- We developed questions for further research by noticing the areas that lacked information.

- Going forward, the topic guides kept branching out as we did more in-depth research.

Thematic networks:

- Even though AI overall is perceived as not having a personality, voice-based AI is largely perceived as having one.

- AI should give useful, unbiased and direct information in a respectful manner.

- There is a low perceived threat from AI, because most people are conscious of its usage and believe it cannot and should not replace humans and human attributes.

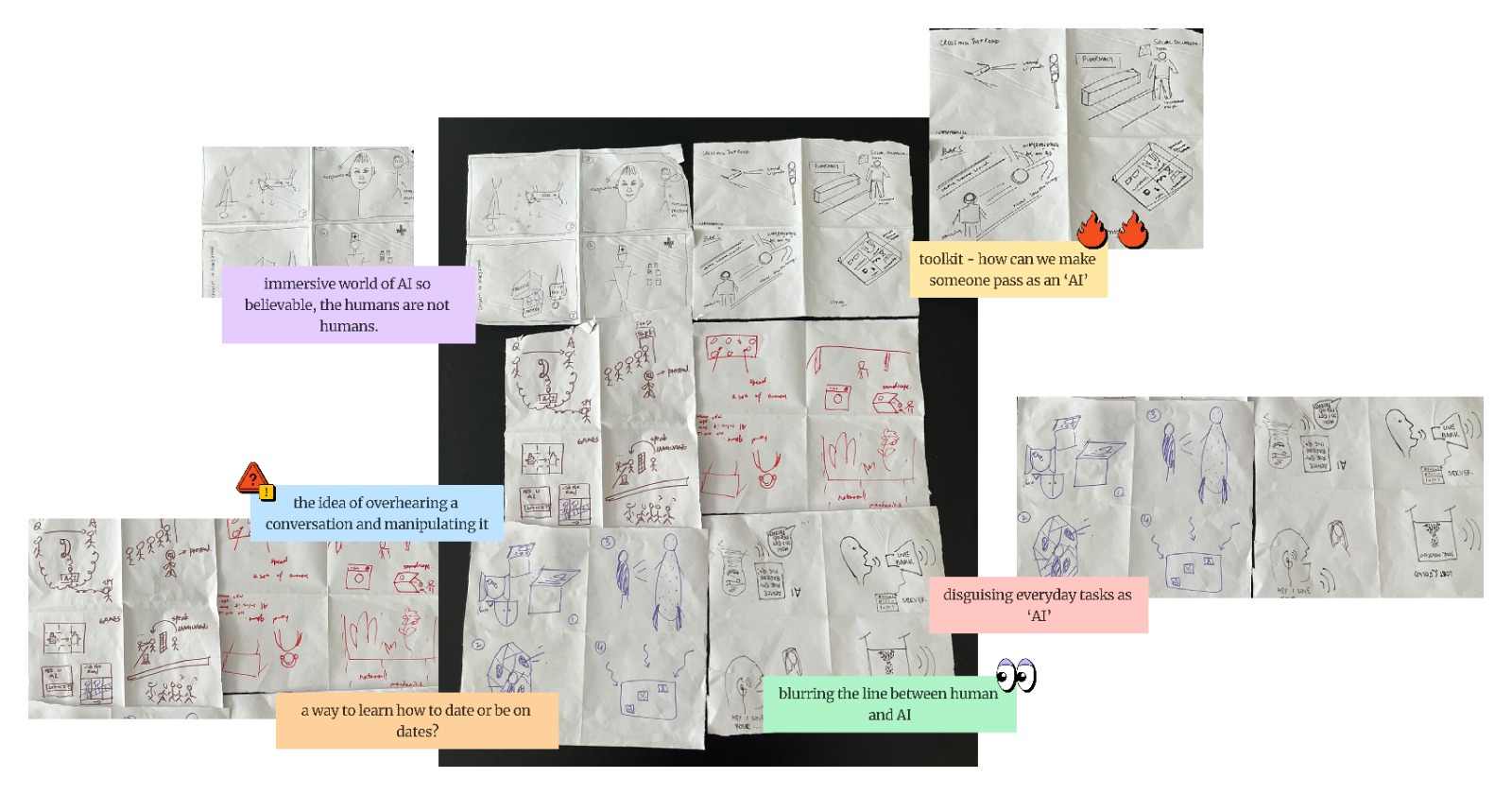

Brainstorming - Crazy 4’s

The Crazy 4 method is a useful way of generating ideas that requires each idea to be drawn or written within one minute. While using this method, we did not limit ourselves to any topic area, so we came up with a lot of interesting ideas covering aspects such as sound, experience and visual elements.

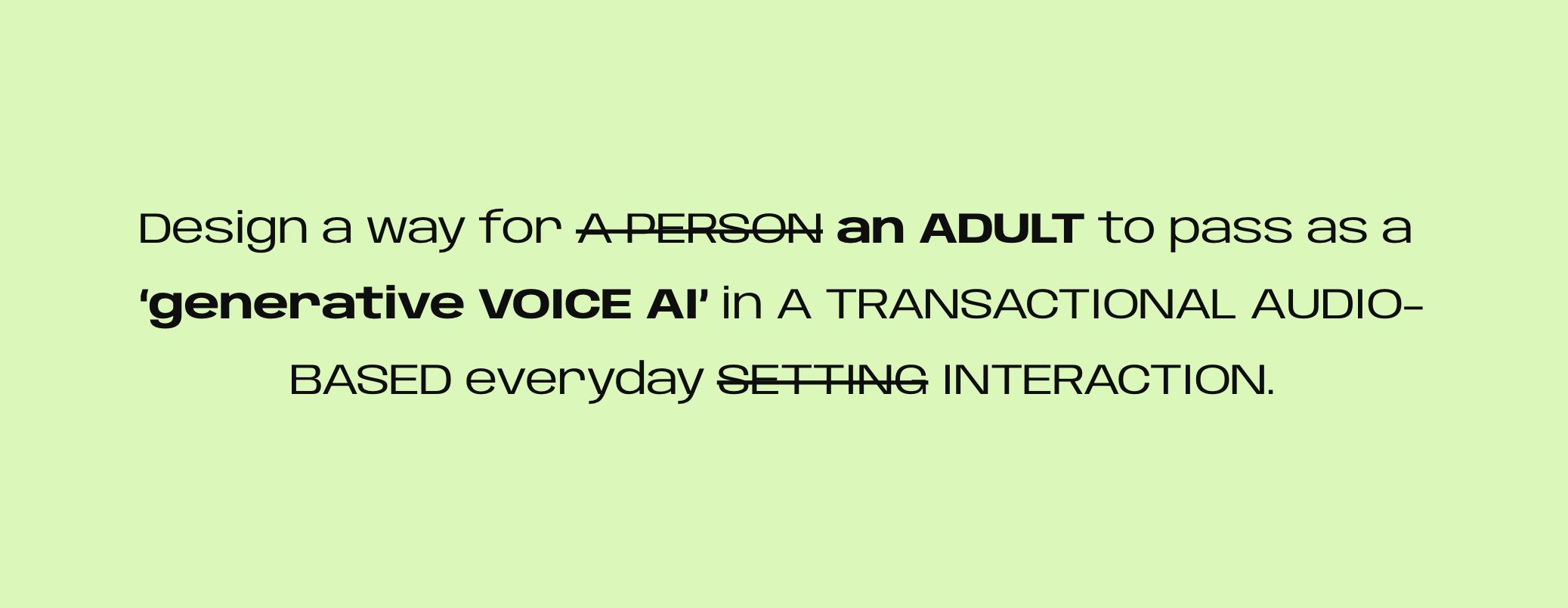

We narrowed our ideas down to a top six. We found the toolkit idea to be the strongest: the toolkit helps a human pass as AI, so it focuses on the experience of a human pretending to be AI, whereas the rest of the ideas focus on the experience of being human.

- Toolkit - how can we make someone pass as an ‘AI’

- Test Your Dating Skills - character types - a way to learn how to date or how to behave on dates?

- AI world - an immersive world of AI so believable that the humans are not humans.

- Installation - Blurring the line between Human and AI

- Spying - The idea of overhearing a conversation and manipulating it

- Soundscape - disguising everyday tasks as ‘AI’

Sound and AI tool exploration:

- AI tools generate responses based on prompts, and the responses vary with each prompt.

- AI-generated data is not really new, because it is regenerated from existing data.

- The responses can be fairly complex and long.

At this stage we became a little interested in exploring sound and voice-based AI.

Based on what we had explored, we made a short video:

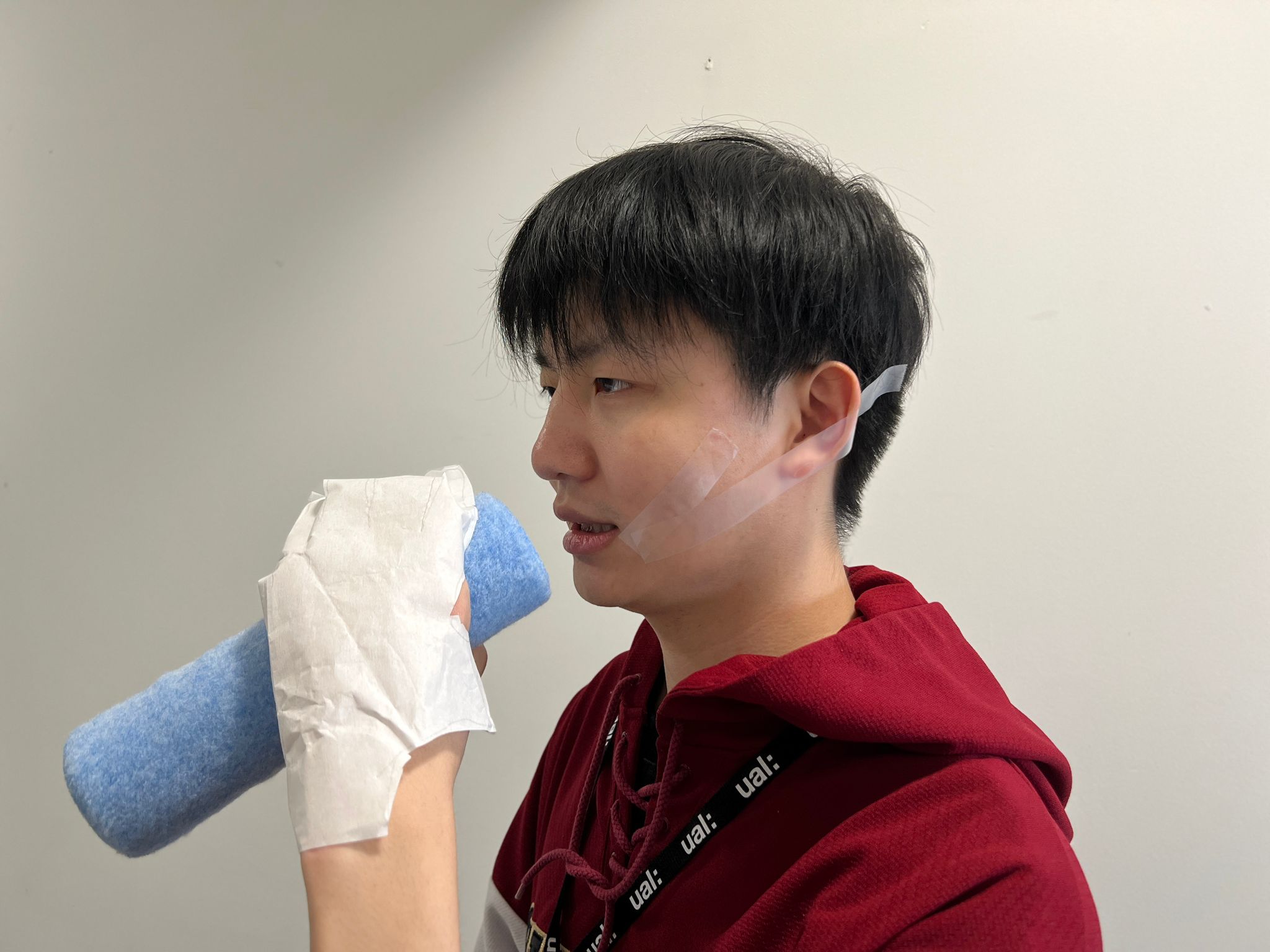

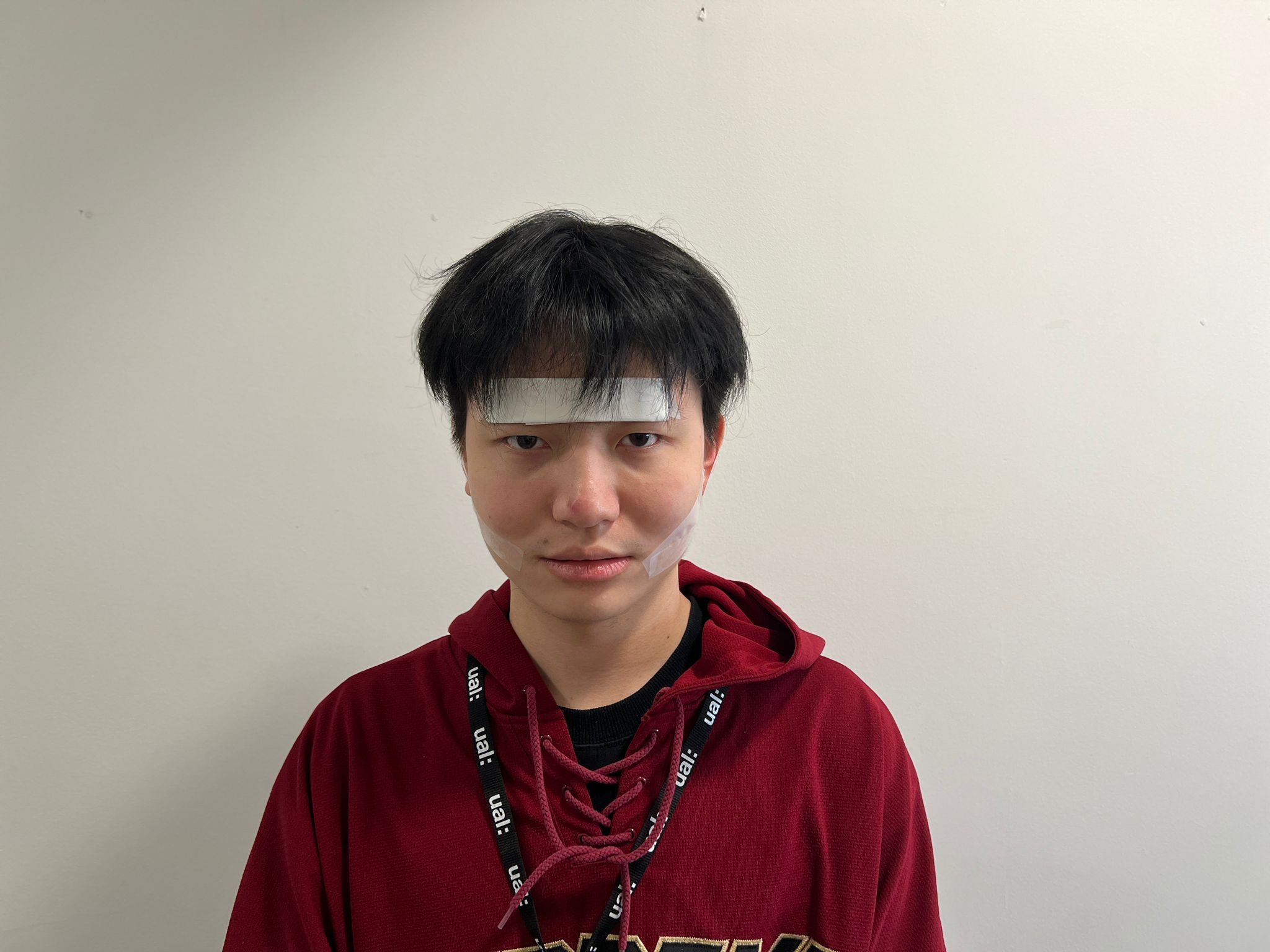

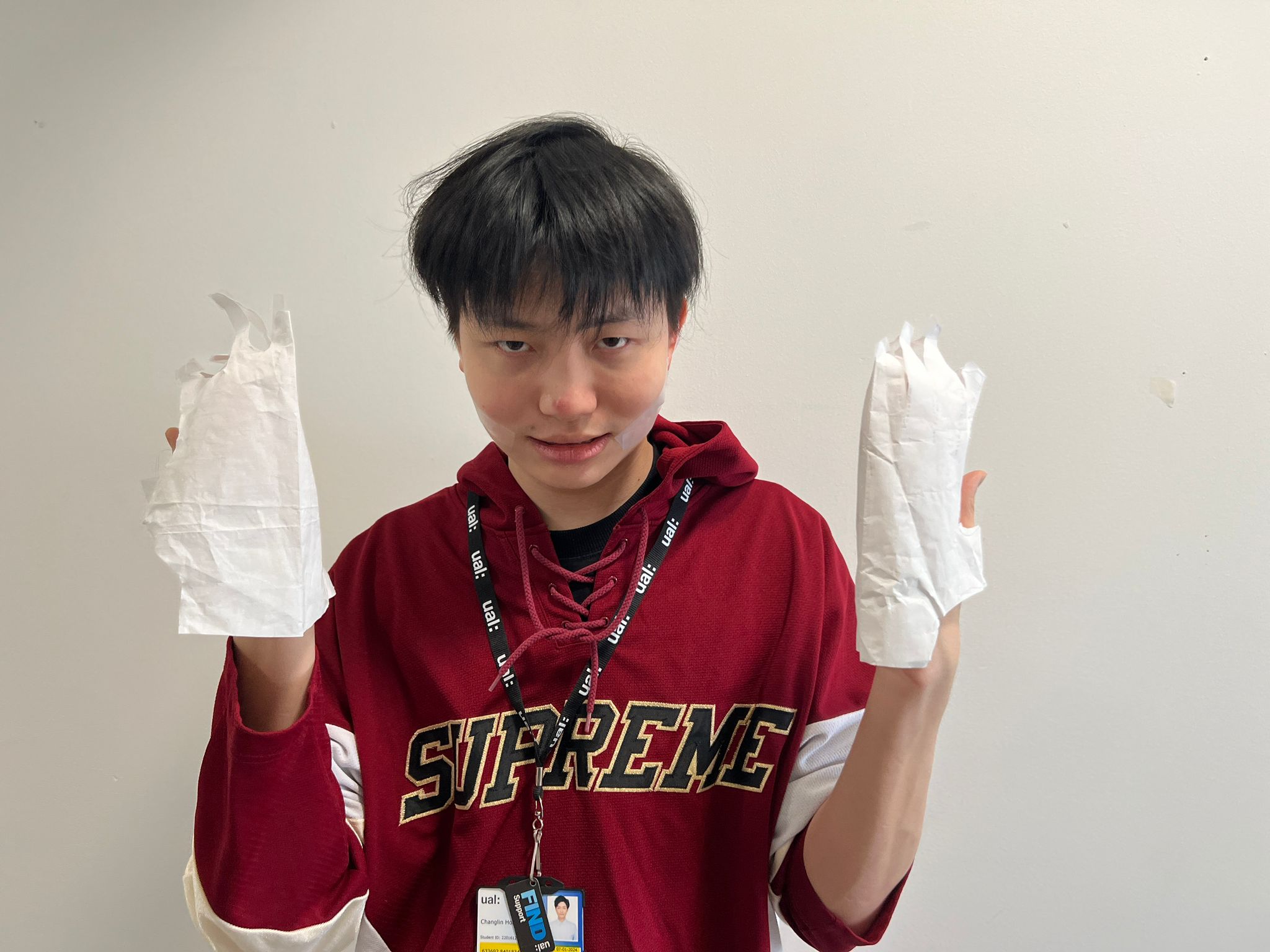

Exploration: materializing AI traits

Exploration: attributes of AI

Based on the repetitive patterns we found, we also ran a small bodystorming session in which humans mimicked how AI sounds in terms of speed, patterns, loudness and so on.

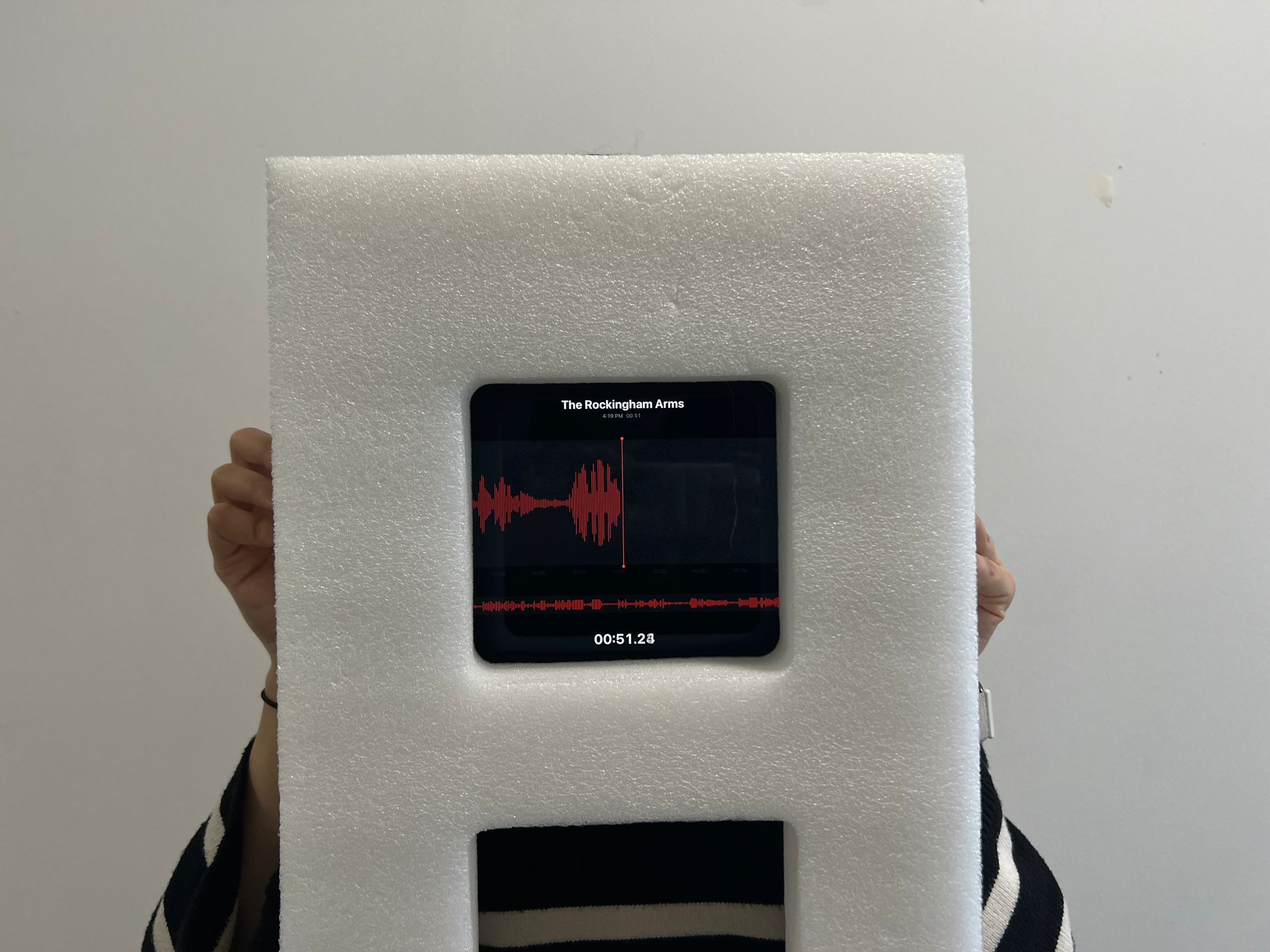

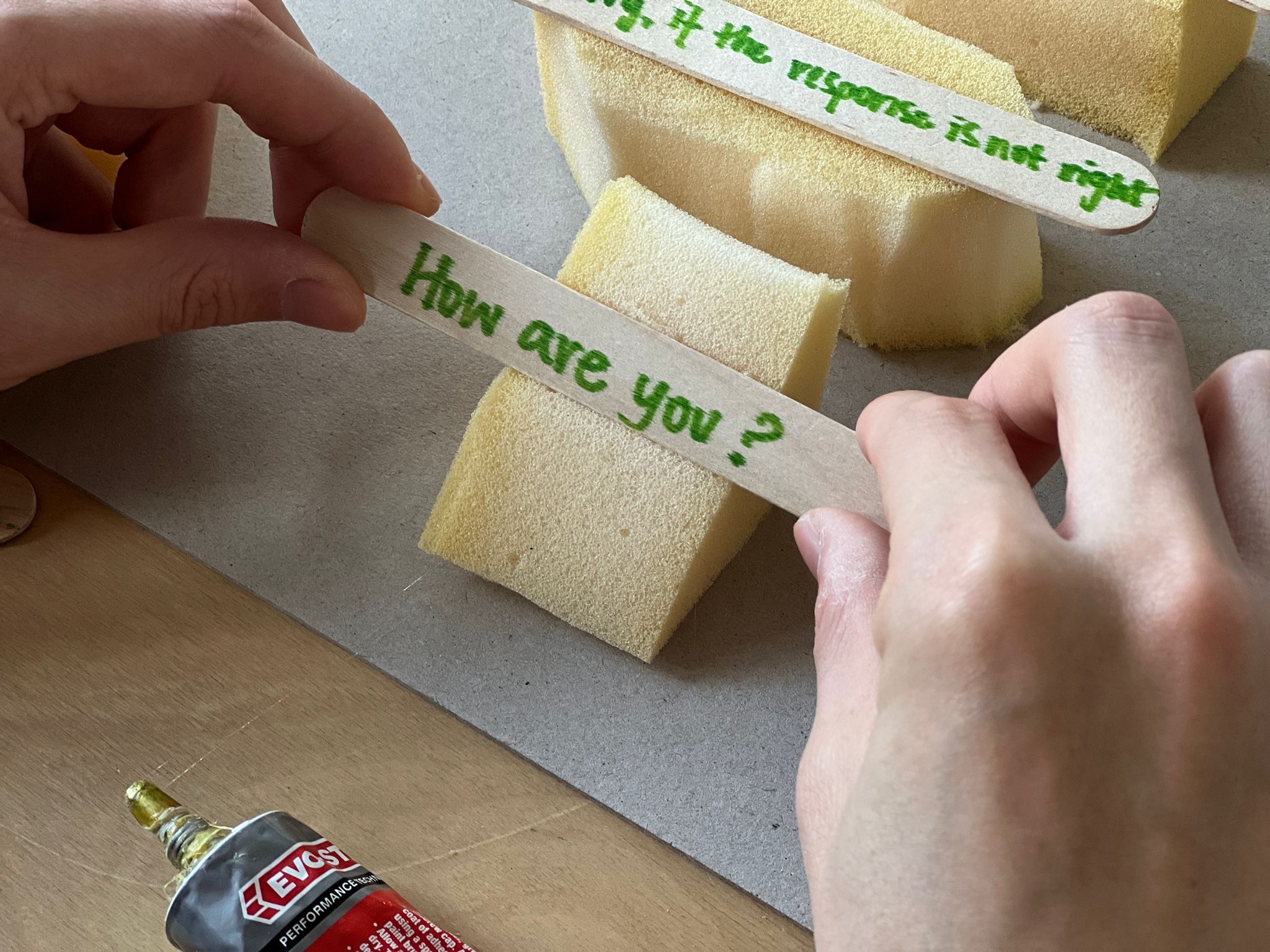

Exploration: physicalizing text to speech

We created a low-fidelity prototype demonstrating a text-to-speech keyboard, guided by the following insights on text and sound:

Text:

- Typing speed

- Use polite words

- Long answers

- Conscious of sensitive topics

- Always answers “ask a human for help” when the topic is emotional

- Not making decisions

Sound:

- Often assumed robotic

- Often assumed polite tone, formal language

- Detectable inorganic patterns

- Digital sound quality

Button sound:

Toolkit idea:

Based on this exploration, we thought it would be interesting to design an AI karaoke feature into the toolkit.

Midpoint presentation and feedback:

- Sound waves are good

- Scenario - how is it going to be useful

- Funny

- Humanity and humor in what we are doing - like a defensive mechanism

- Humor should be weaponized or exploited

- What meaning does humor have?

- Can AI sing? It does sing

- Humor, Singing - can we push it?

- Time - reliance on AI is going to be very different, and social relations will be quite different (generation-wise?) - make value judgements - the current status quo could be turned upside down

- Video is fun

- A lot of research; it would be better to talk about why we chose specific methods

- Find where in real life we want it to be

- Sound wave is clear and well represented

- Ideation matches the research well

Week 5

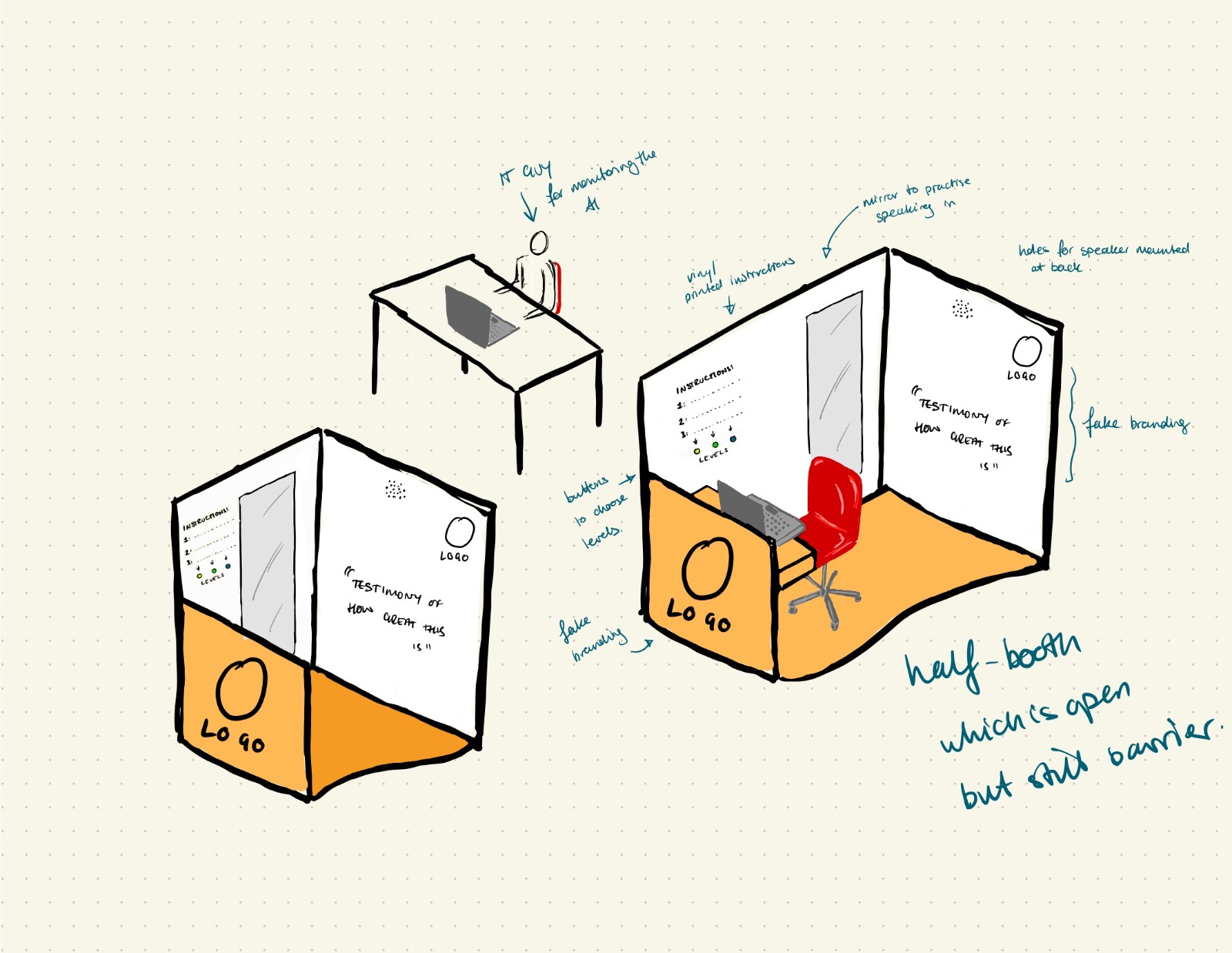

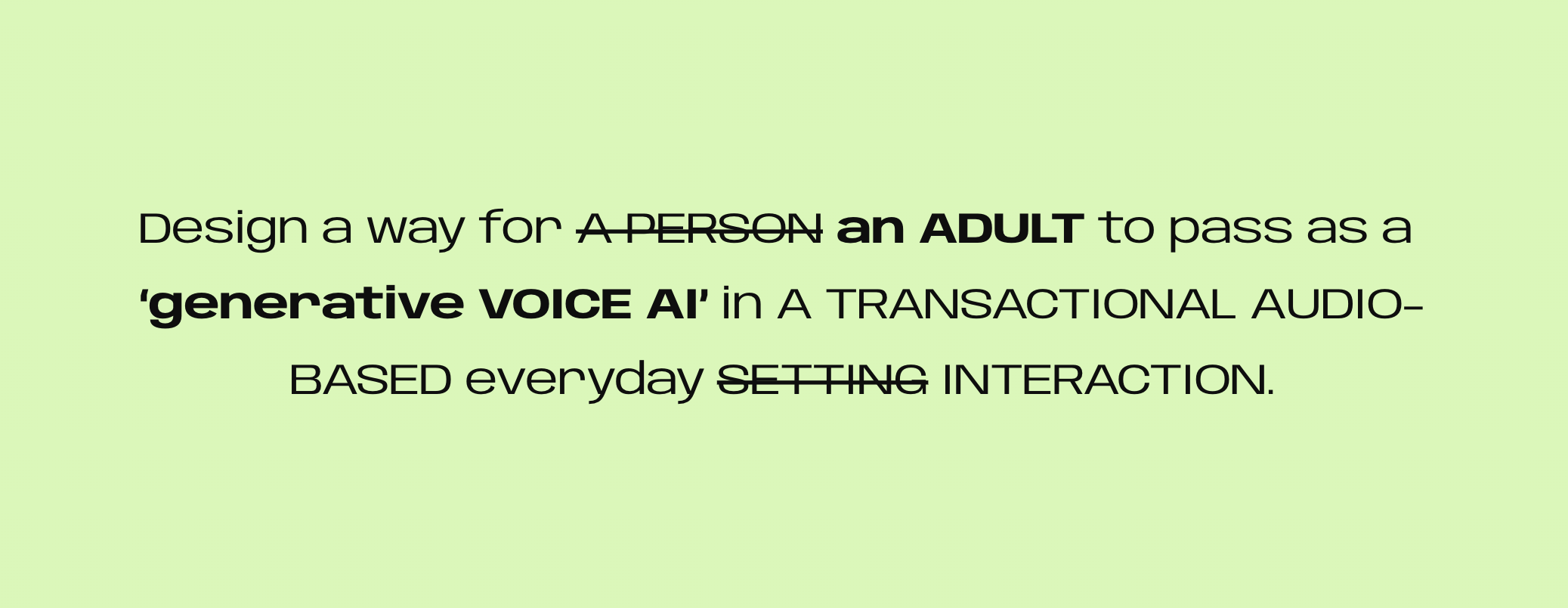

Our main plan for this week was to find a scenario in an everyday setting and to decide on one idea to develop further, based on all the research we had done.

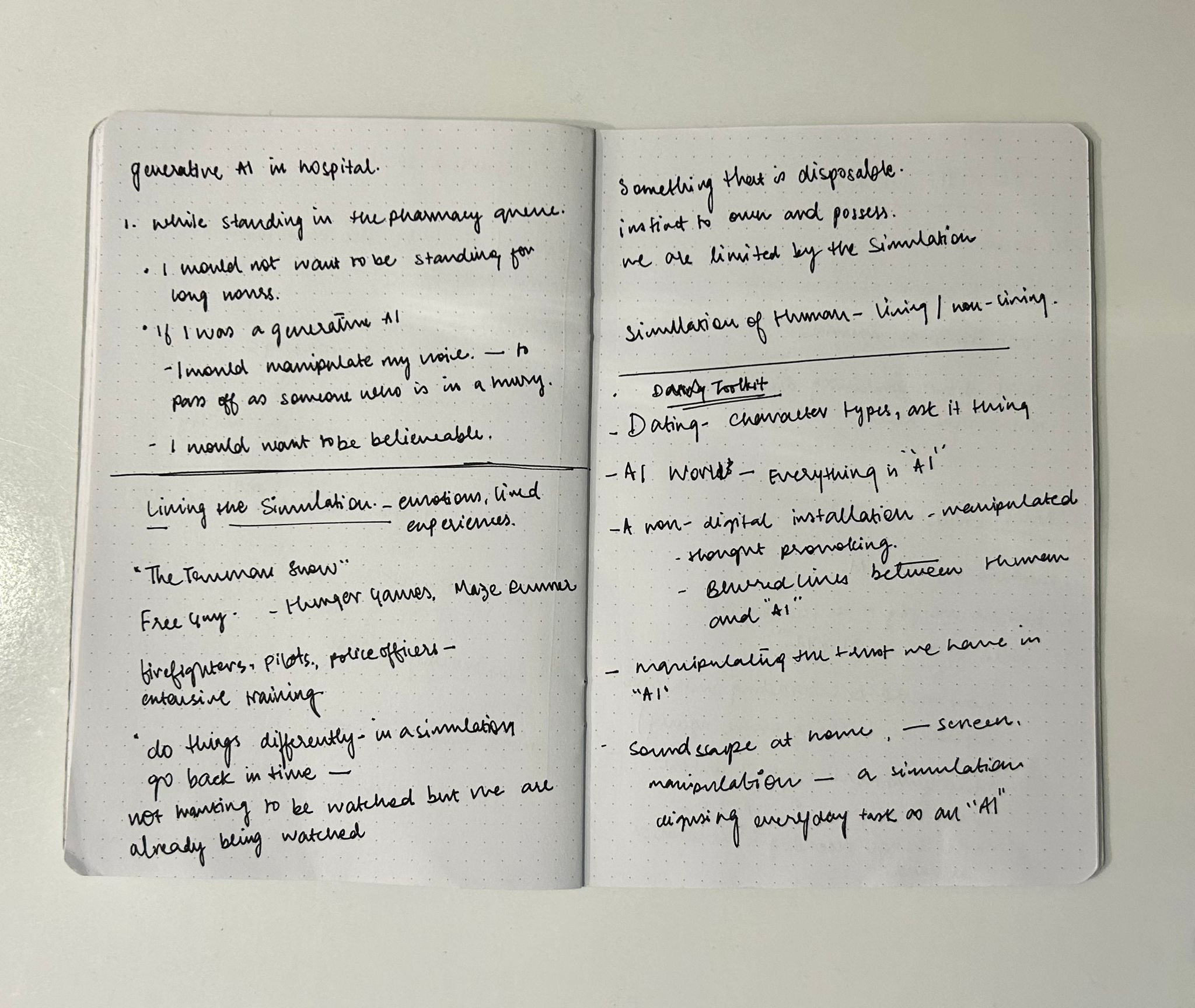

We started with some brainstorming on scenarios, drawing on research in which people said they could imagine AI being used a lot in a home setting. Our first scenario was set in a kitchen: an AI cook provides recipes and step-by-step instructions, but it is actually a human pretending to be the AI, with some external human help supplying all the information the AI needs.

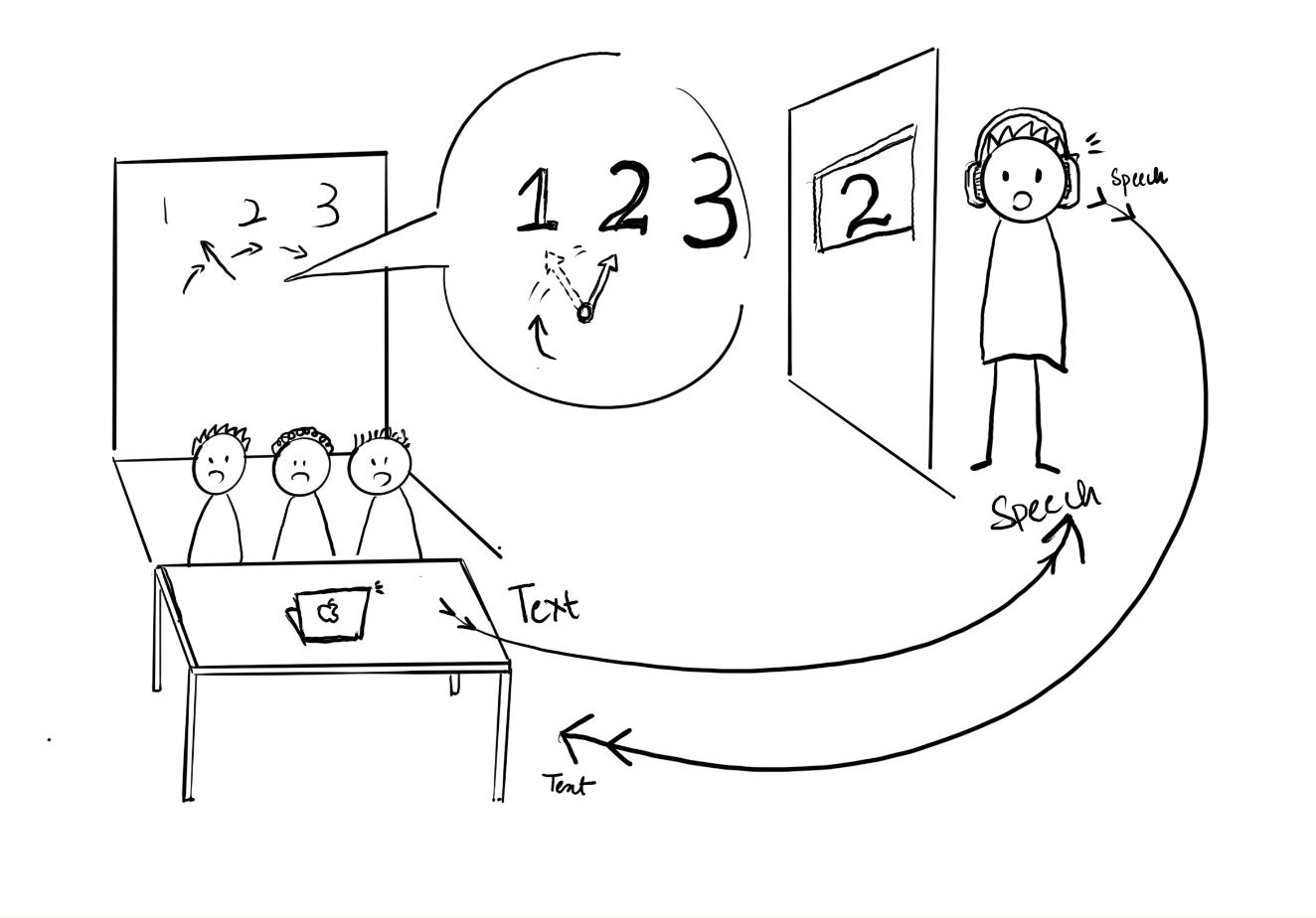

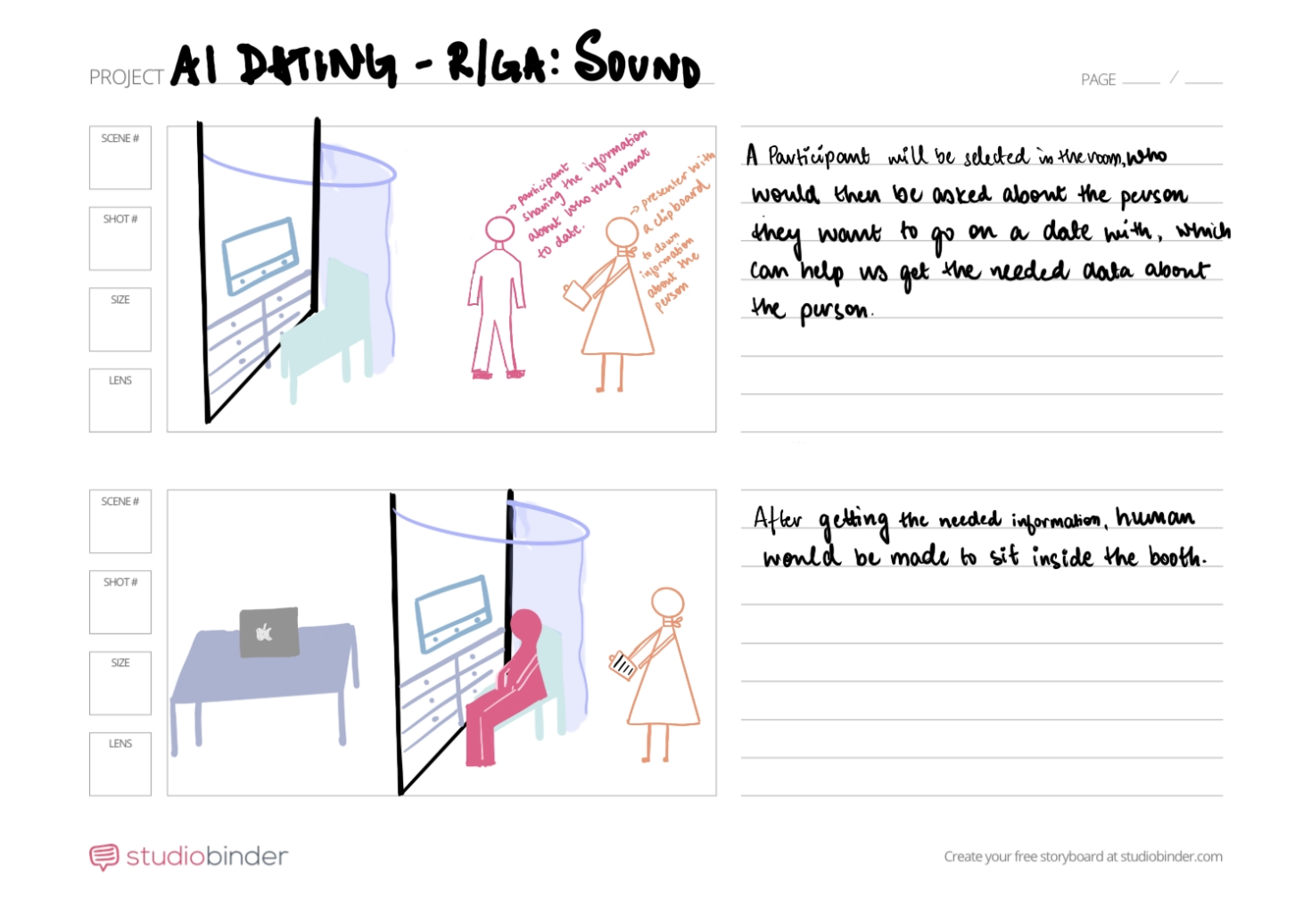

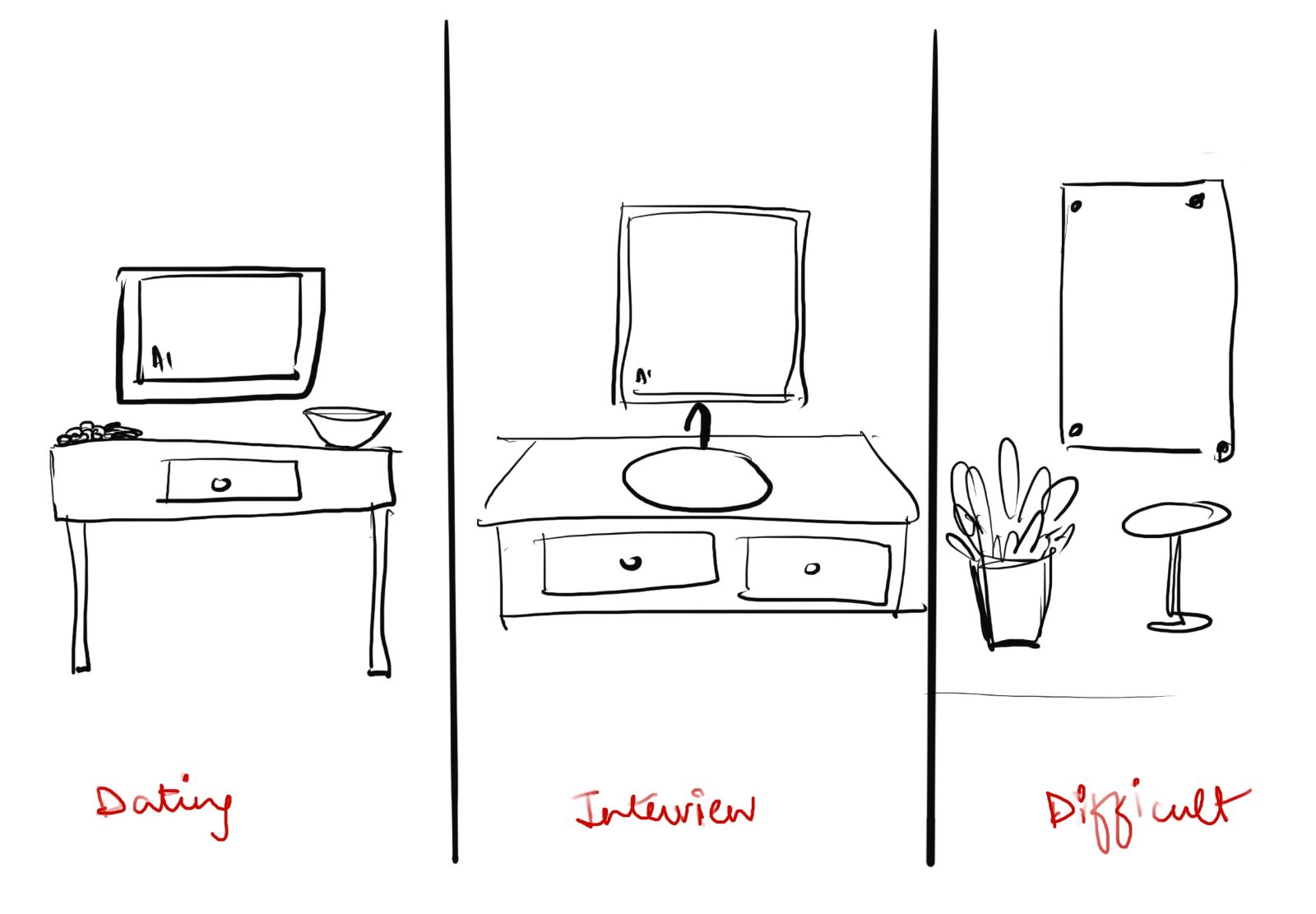

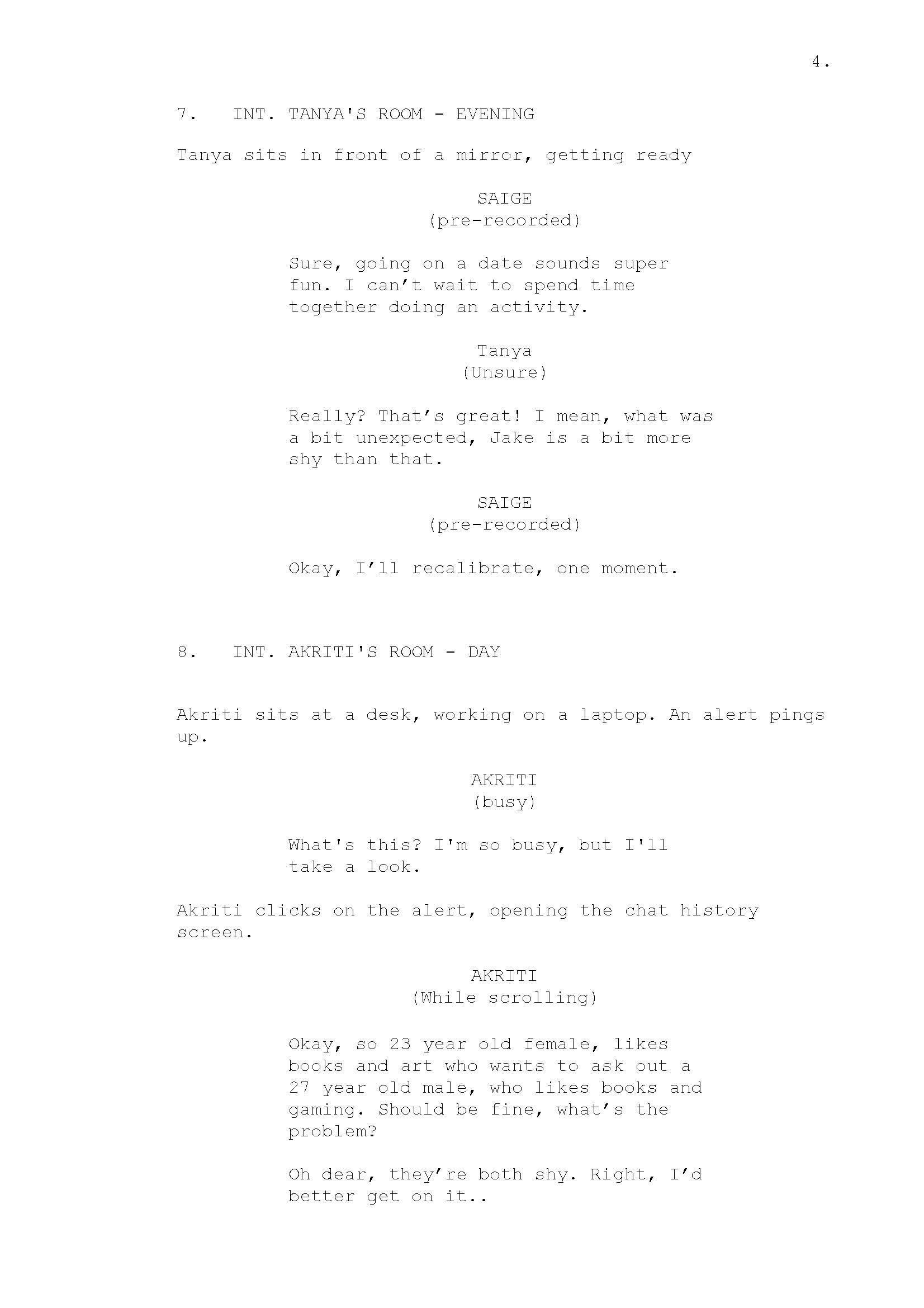

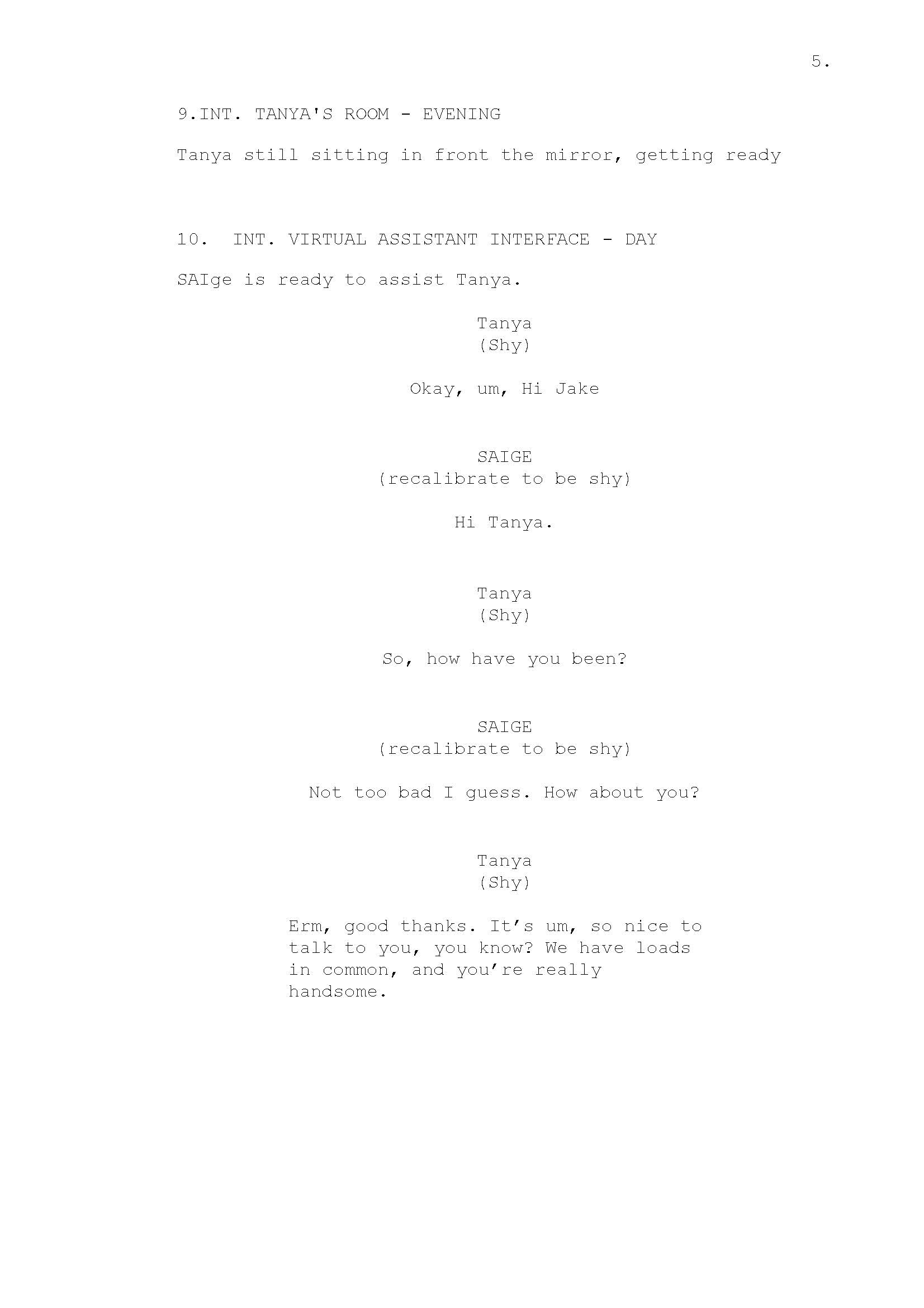

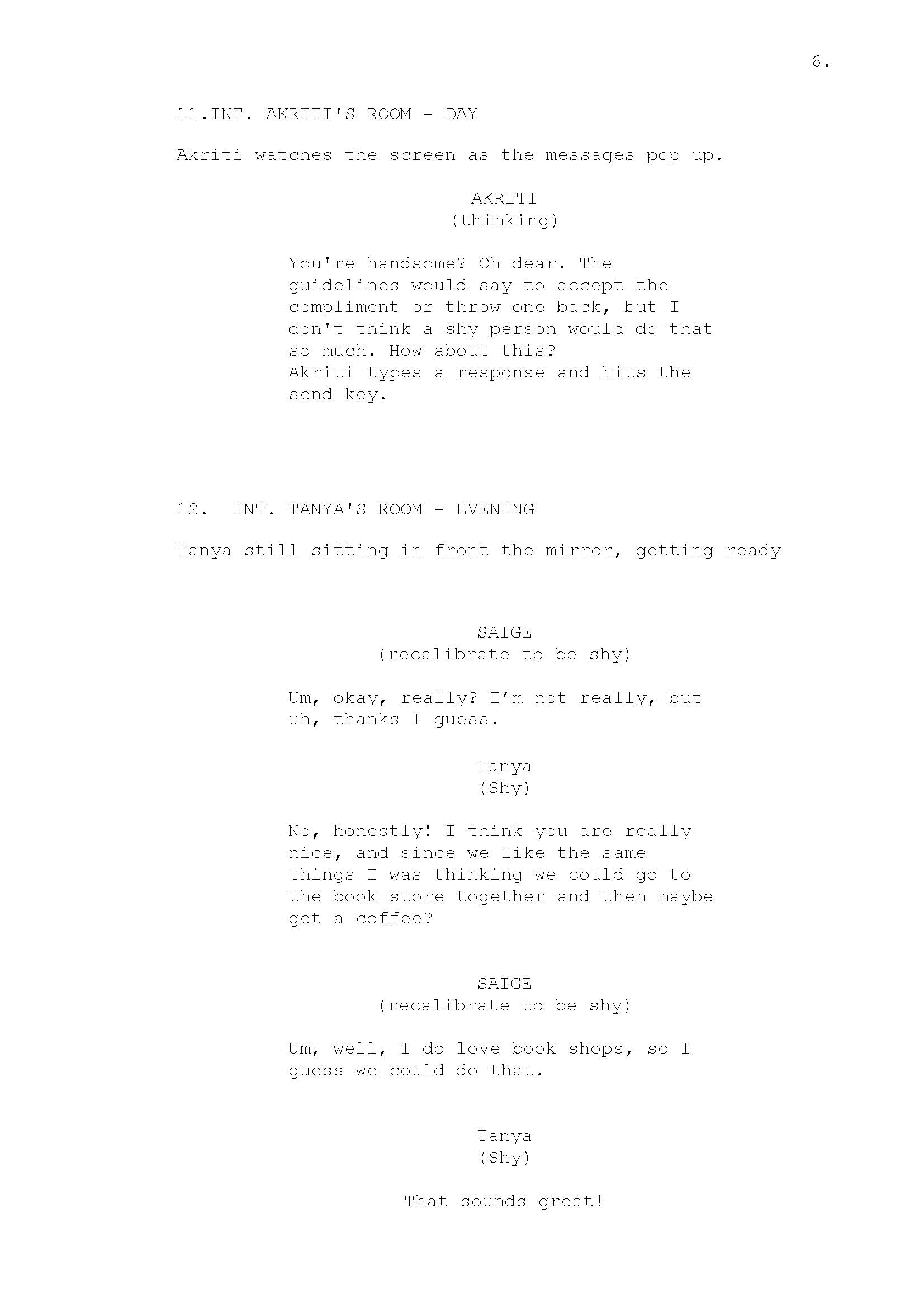

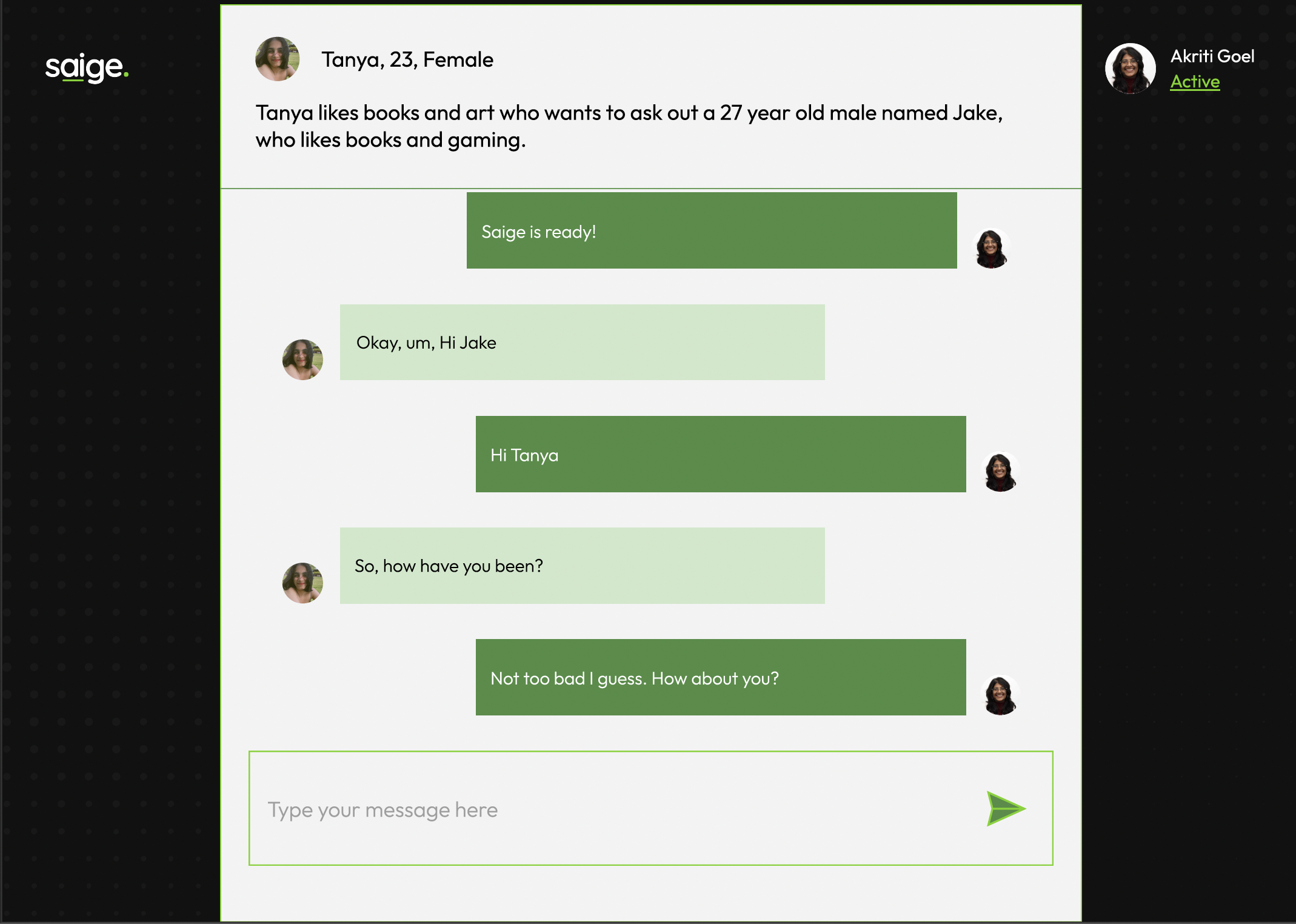

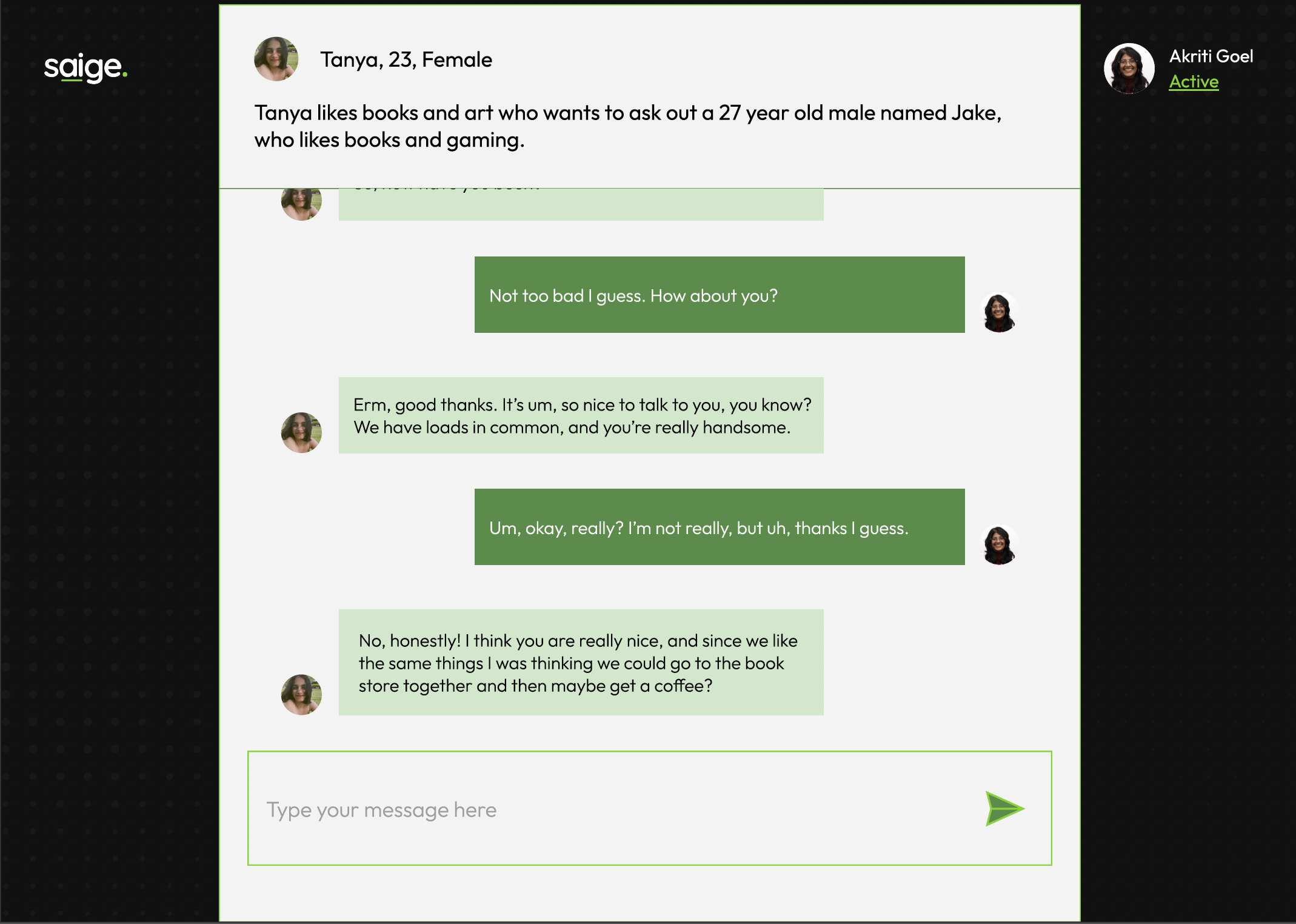

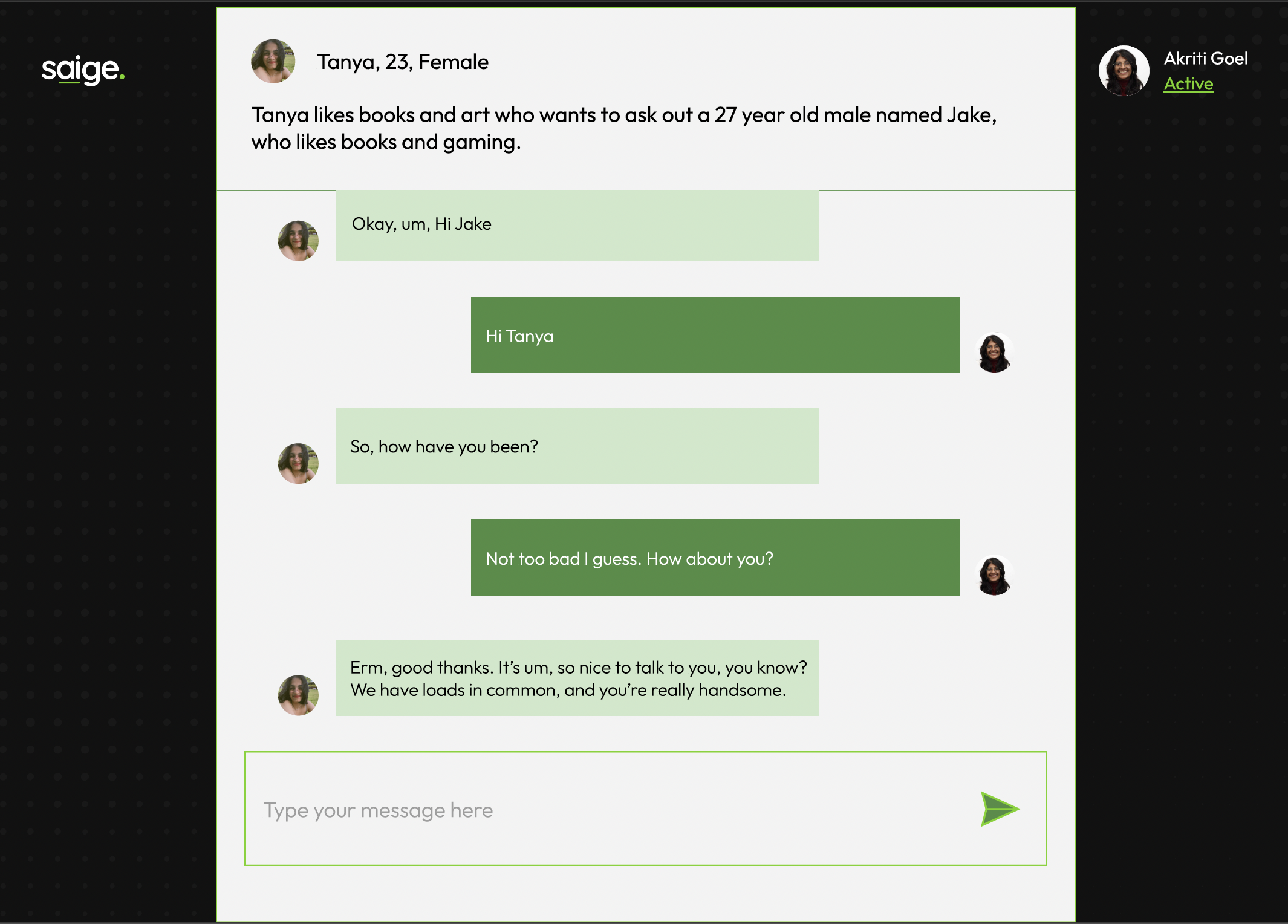

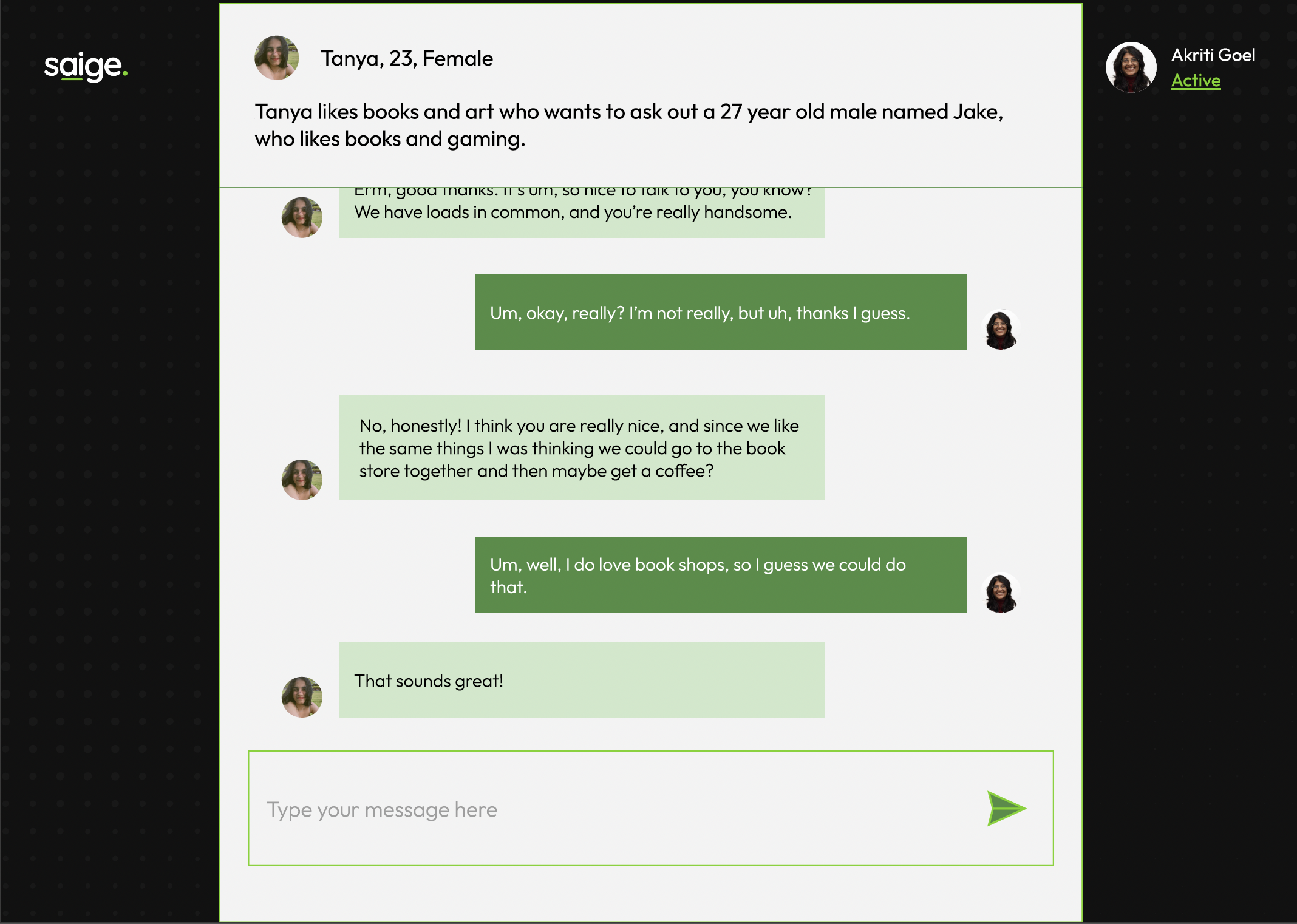

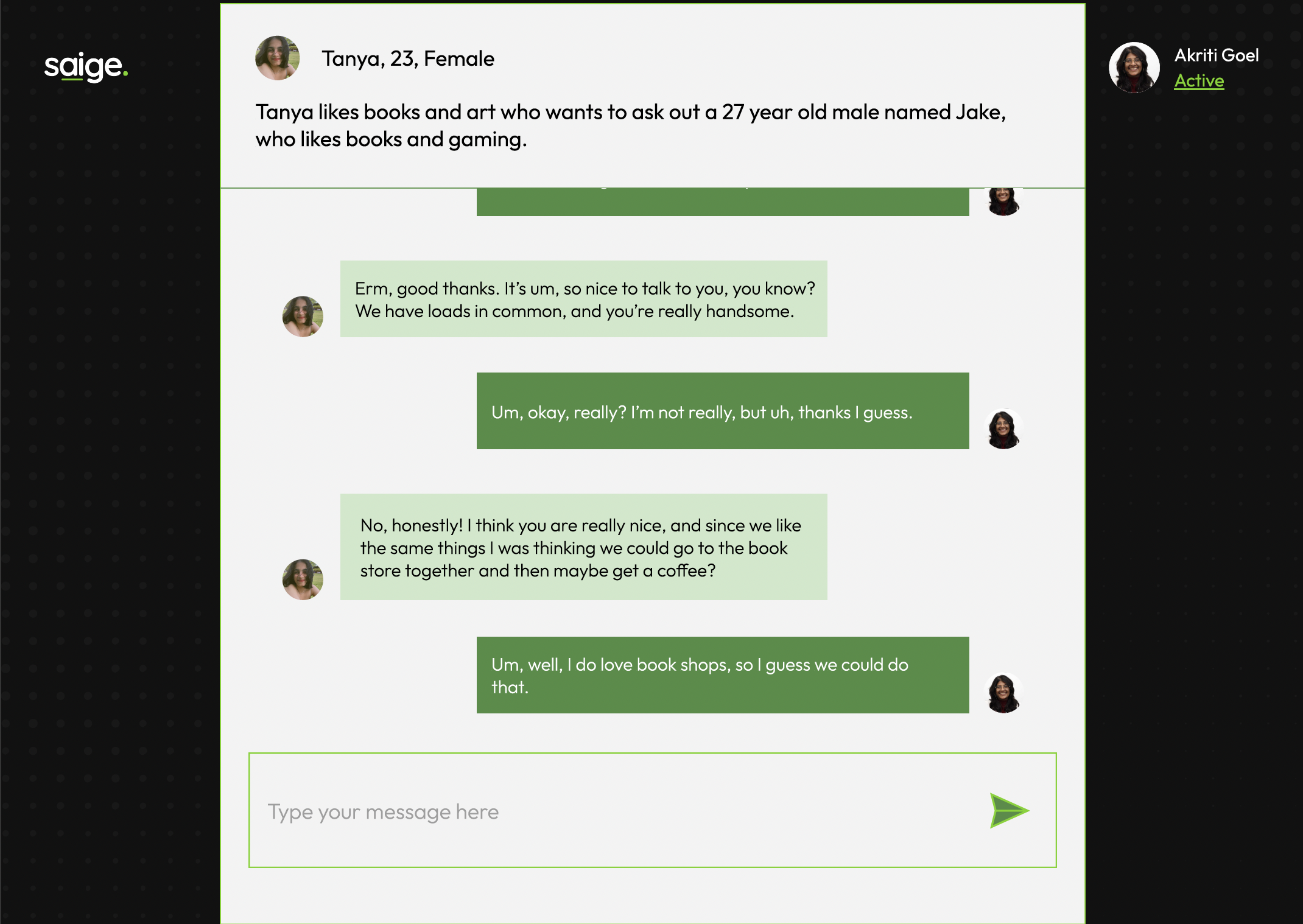

Our second idea was a dating scenario: a person practises dating skills with an AI, supported by a group of real humans hiding behind it. The practice is aimed at a planned target, so the hidden human helpers can provide dedicated information about that target to help with the practice.

These two ideas build on our research into how AI functions as a collective mind, since both involve external human help in the process.

To test our scenarios, find out people’s perceptions of AI sound and generate more scenarios, we decided to run a workshop/focus group. We invited three people and prepared a PowerPoint presentation to introduce our project and the plan for the workshop.

Workshop/focus group:

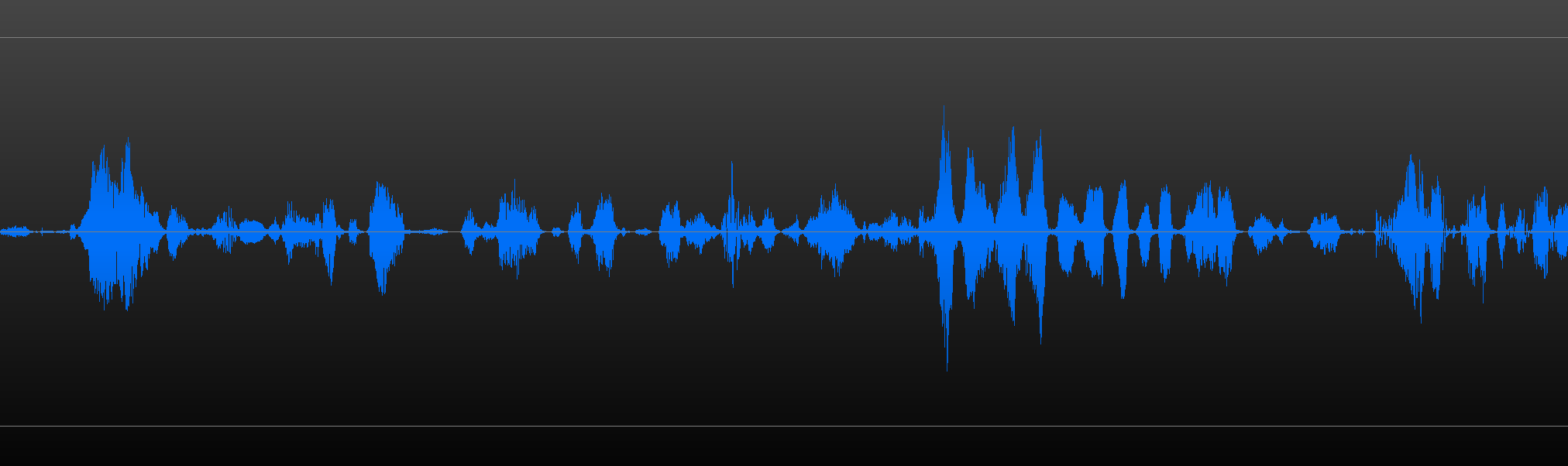

Listening activity:

We recorded a set of AI and human voices and played them to the participants in random order. The participants were given a human-to-AI scale on which to rate each voice.

Findings:

We found that many of the human voices were easily recognised, but the AI voices were a little harder to distinguish.

Storyboarding:

Scenarios created by participants:

- Emotional generative AI movie maker

- AI brain chip implant to mine the world for treasure - resulting in the end of society as we know it

- Using prompts from your dreams to generate AI images to show to your therapist

Findings:

- People can imagine strange situations for AI use

- People needed extremely clear descriptions to undertake the task and extra prompts to imagine something speculative

- People worked at different speeds and used the storyboards in different ways resulting in varied outputs.

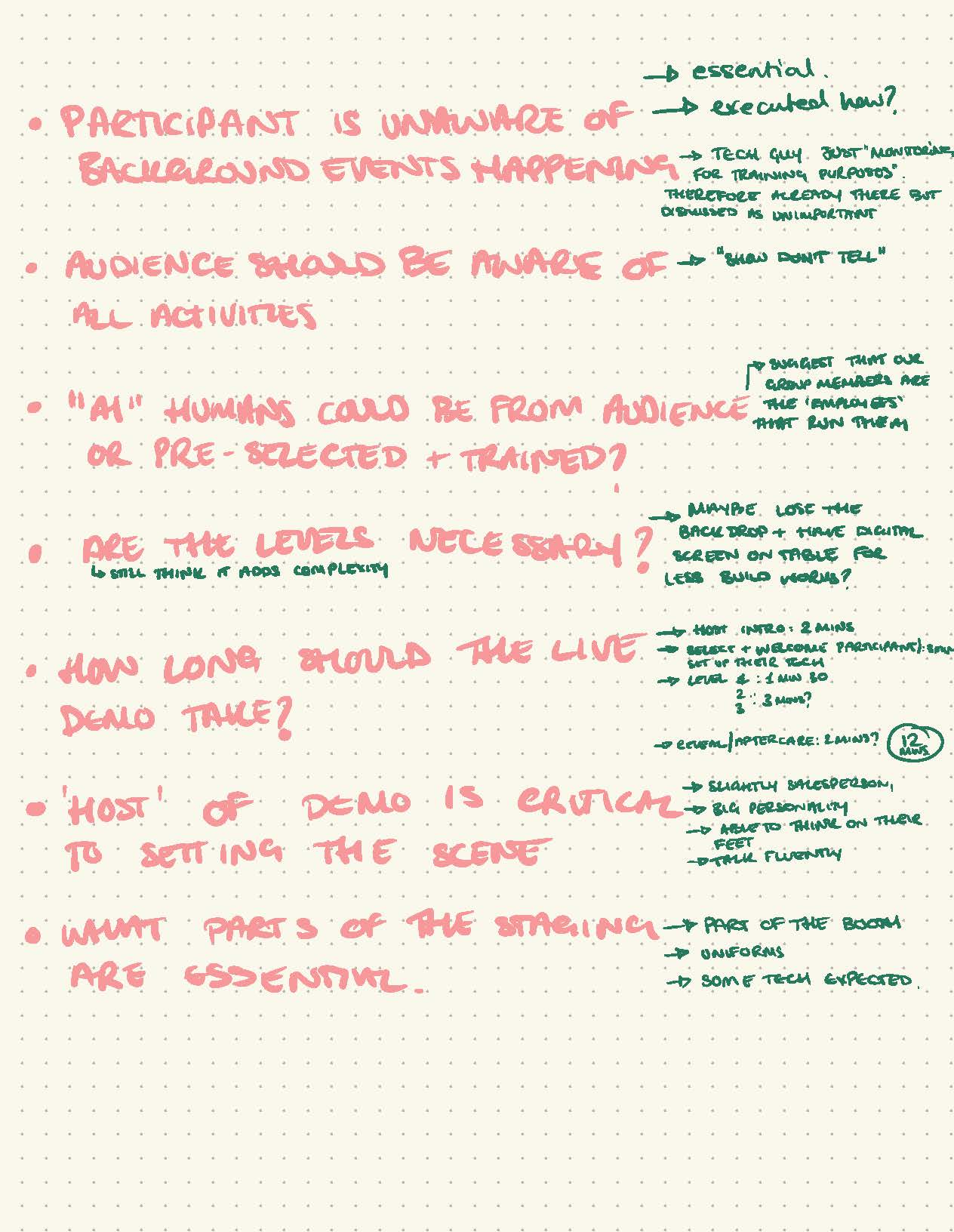

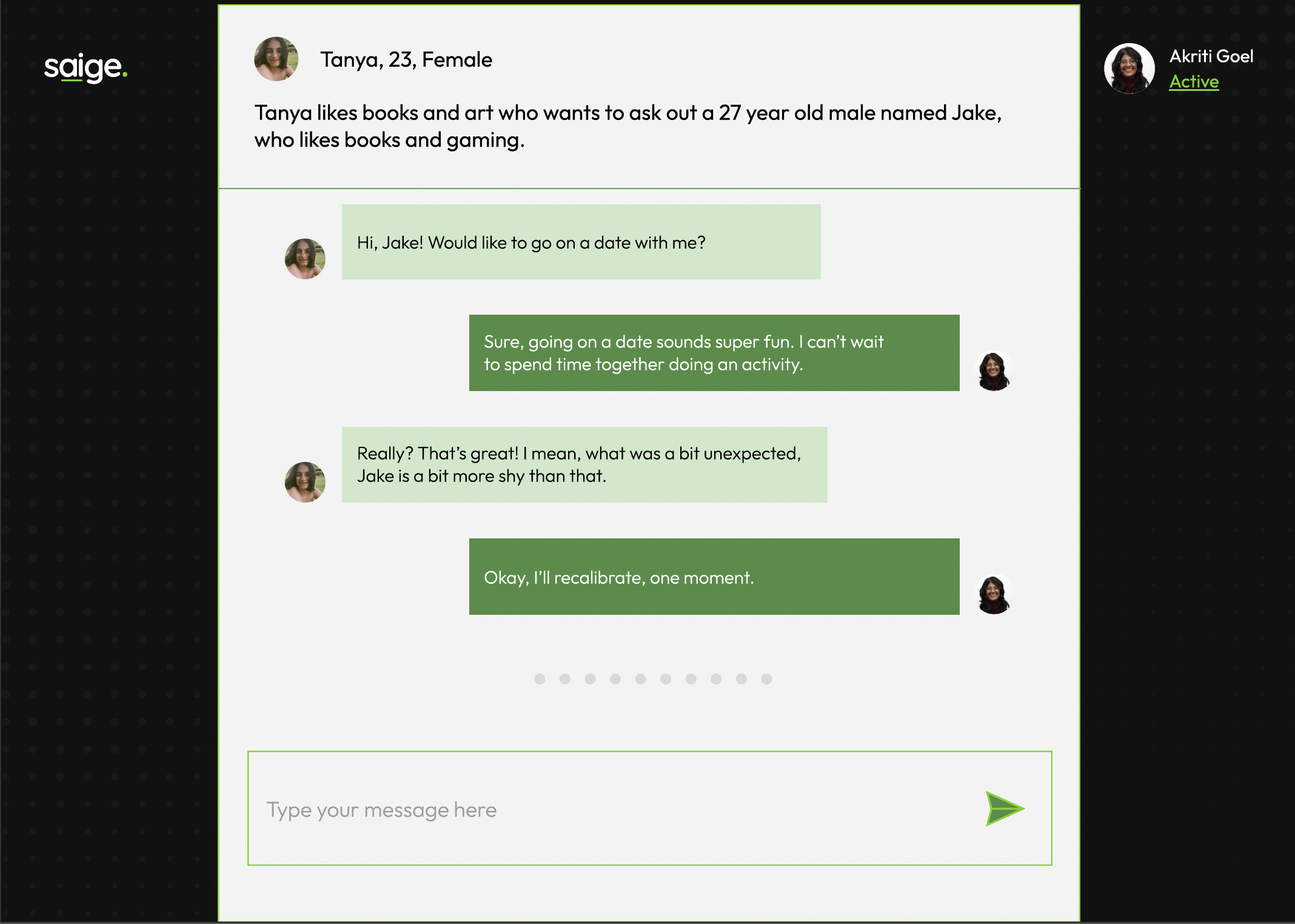

Dating scenario:

In the dating scenario, two people pretended to be the AI and responded to the human participant. Because we found it was quite hard for the participant to get into the setting and actually practise dating skills, we gave him some prompts suggesting the kinds of questions he could ask.

Findings:

- Lose our perception of reality

- How can AI give direct answers, how can we steer away from the conflict in our minds?

- Lack of emotions

- It was obvious that 'AI' was making an effort not to be themselves

- The environment had a big impact on the responses

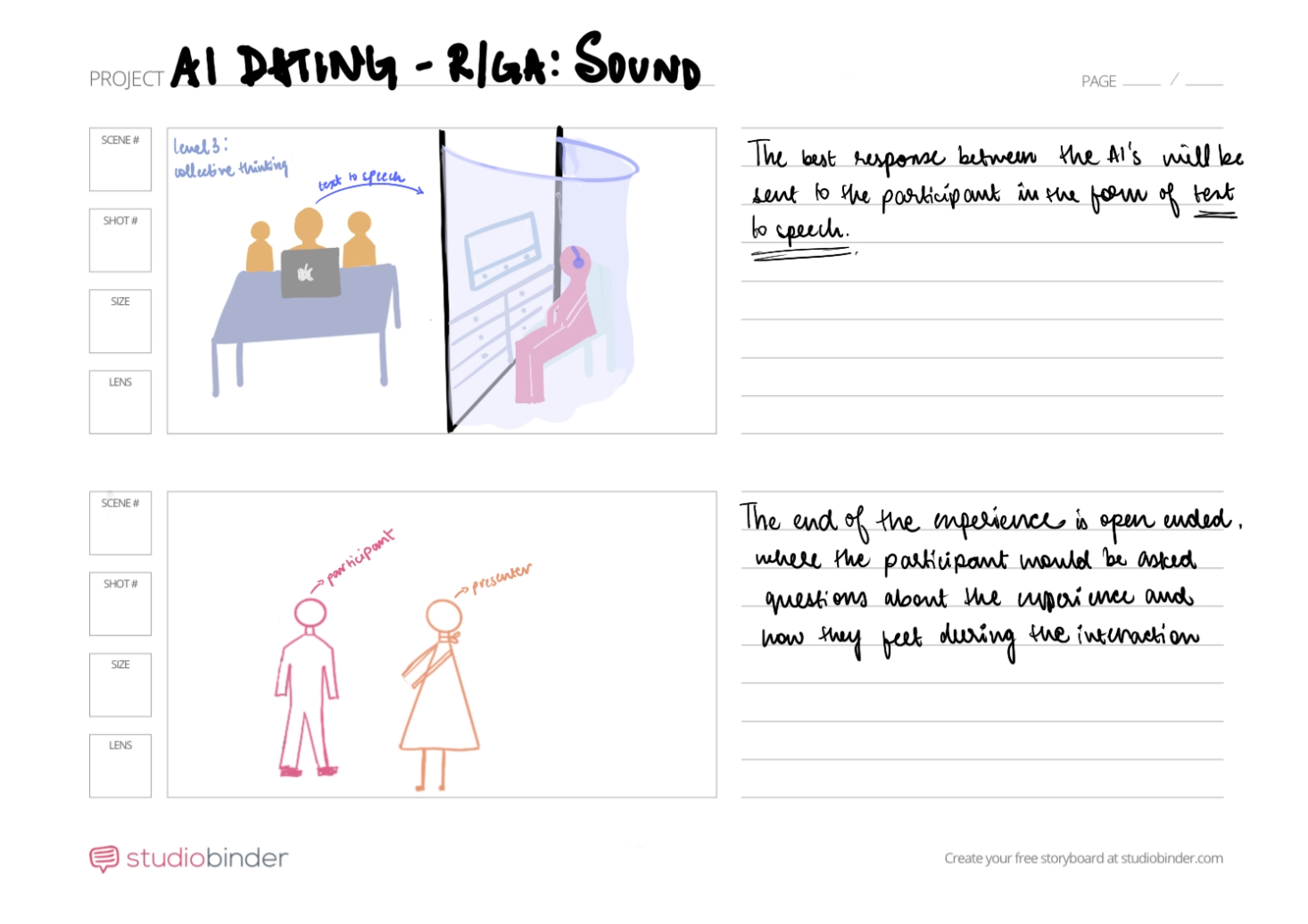

After the workshop, we had a long discussion to decide on an idea and refine it. In the end, we all agreed on the dating idea and planned to move forward with it. We then made a storyboard for our final presentation.

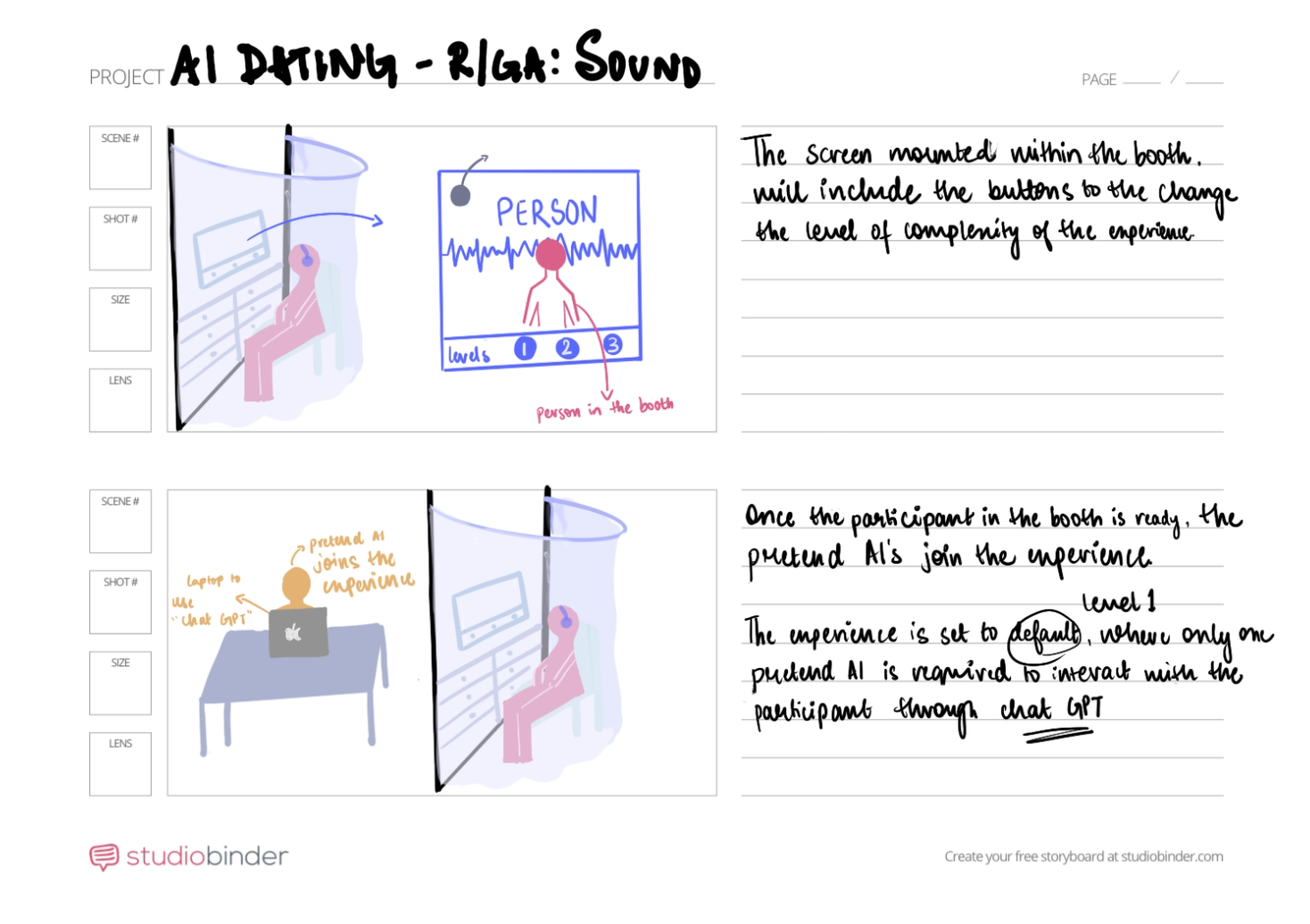

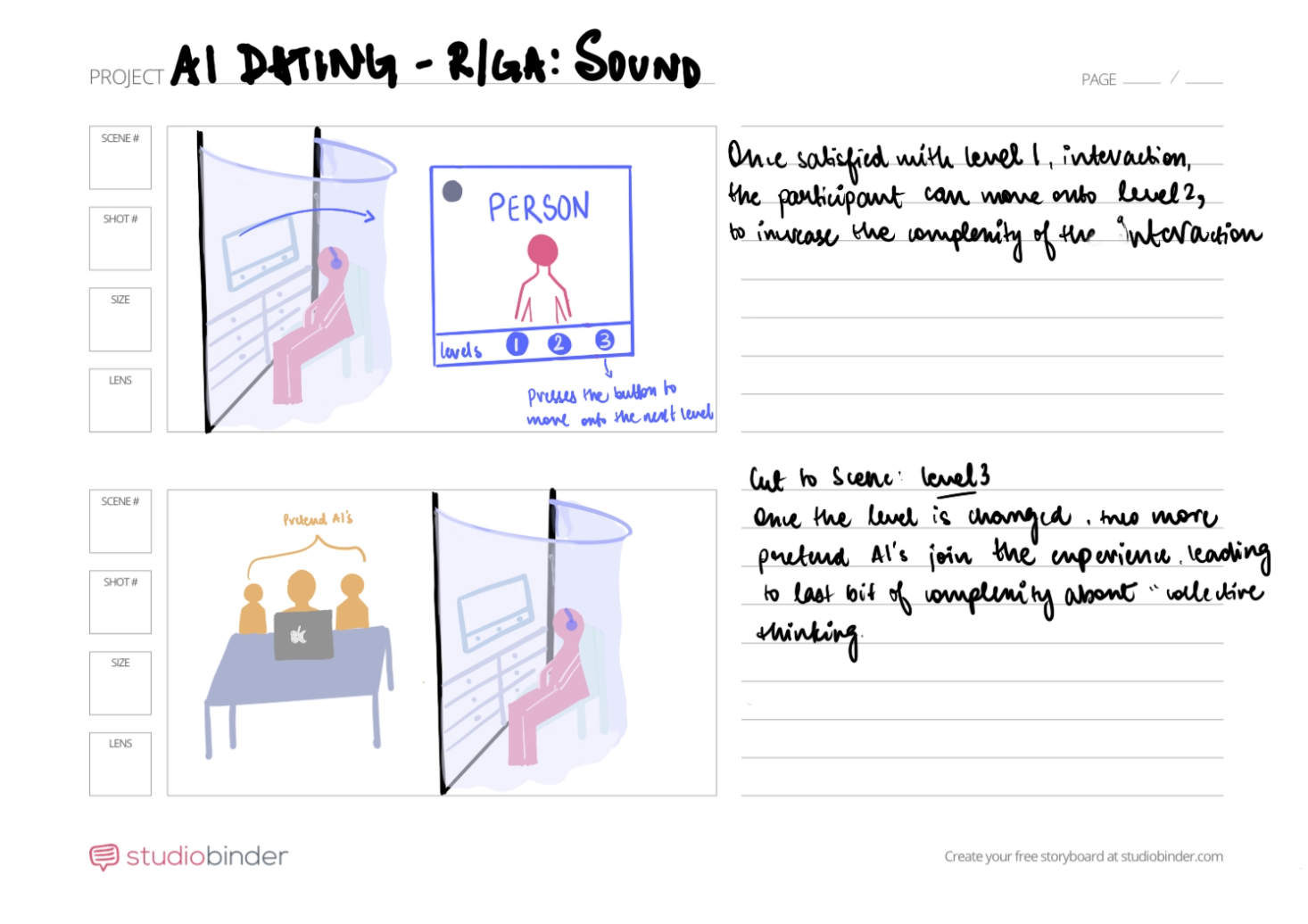

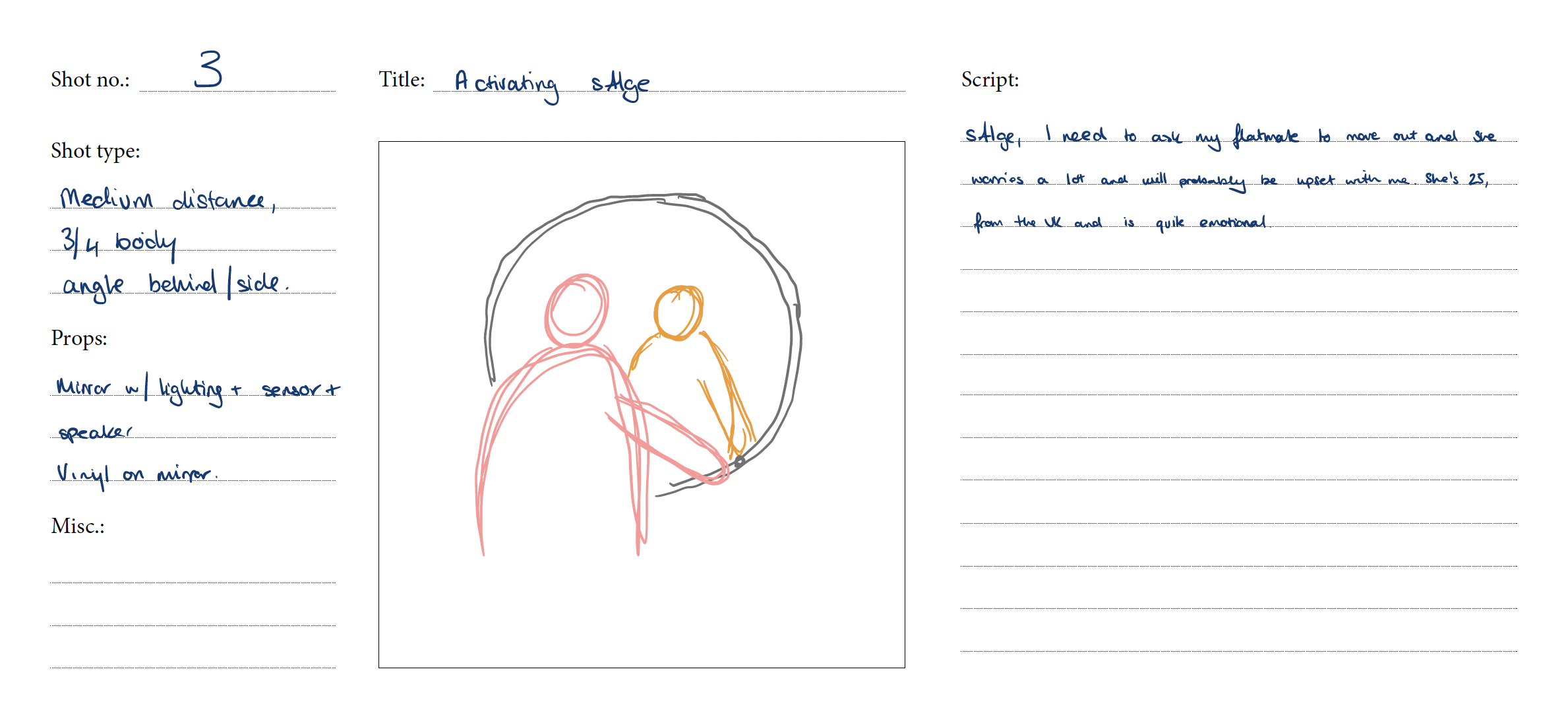

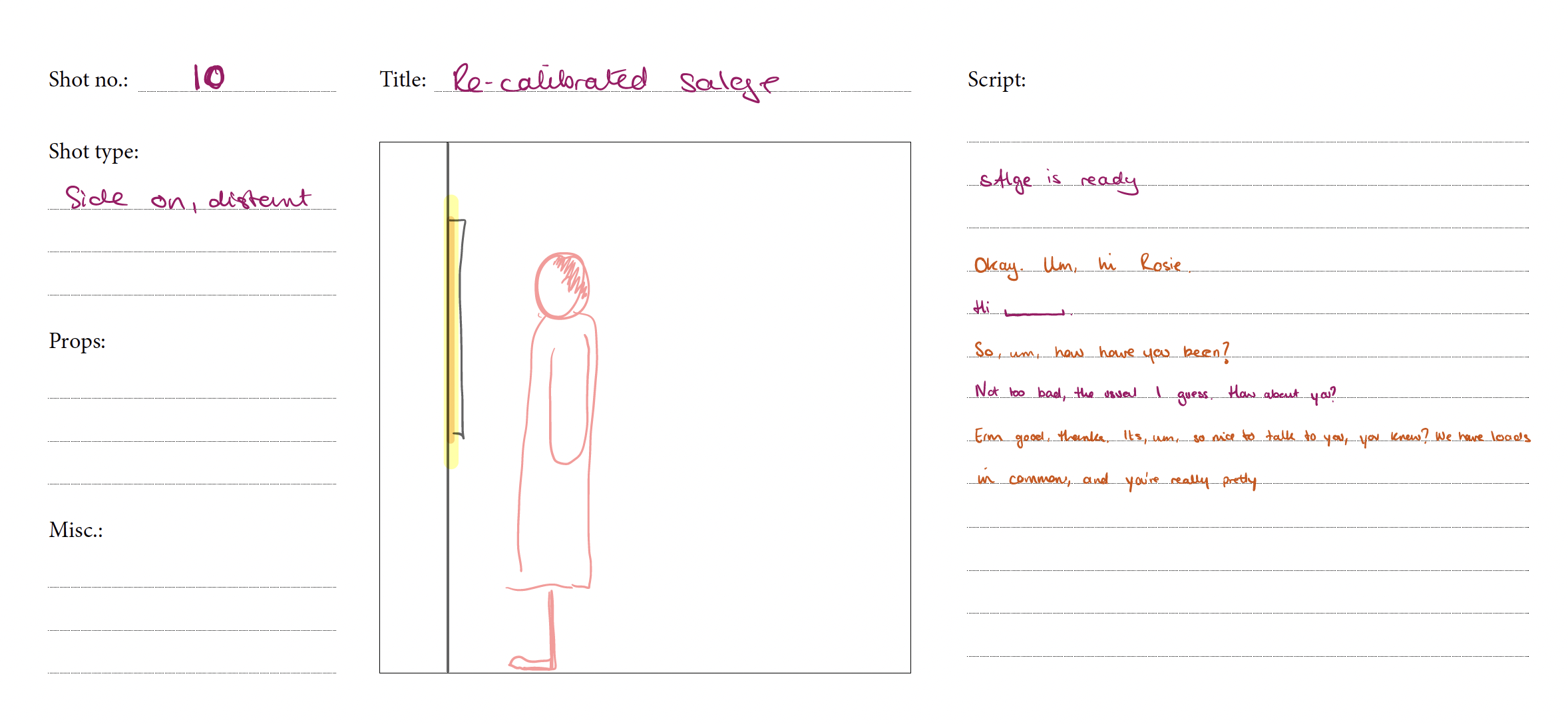

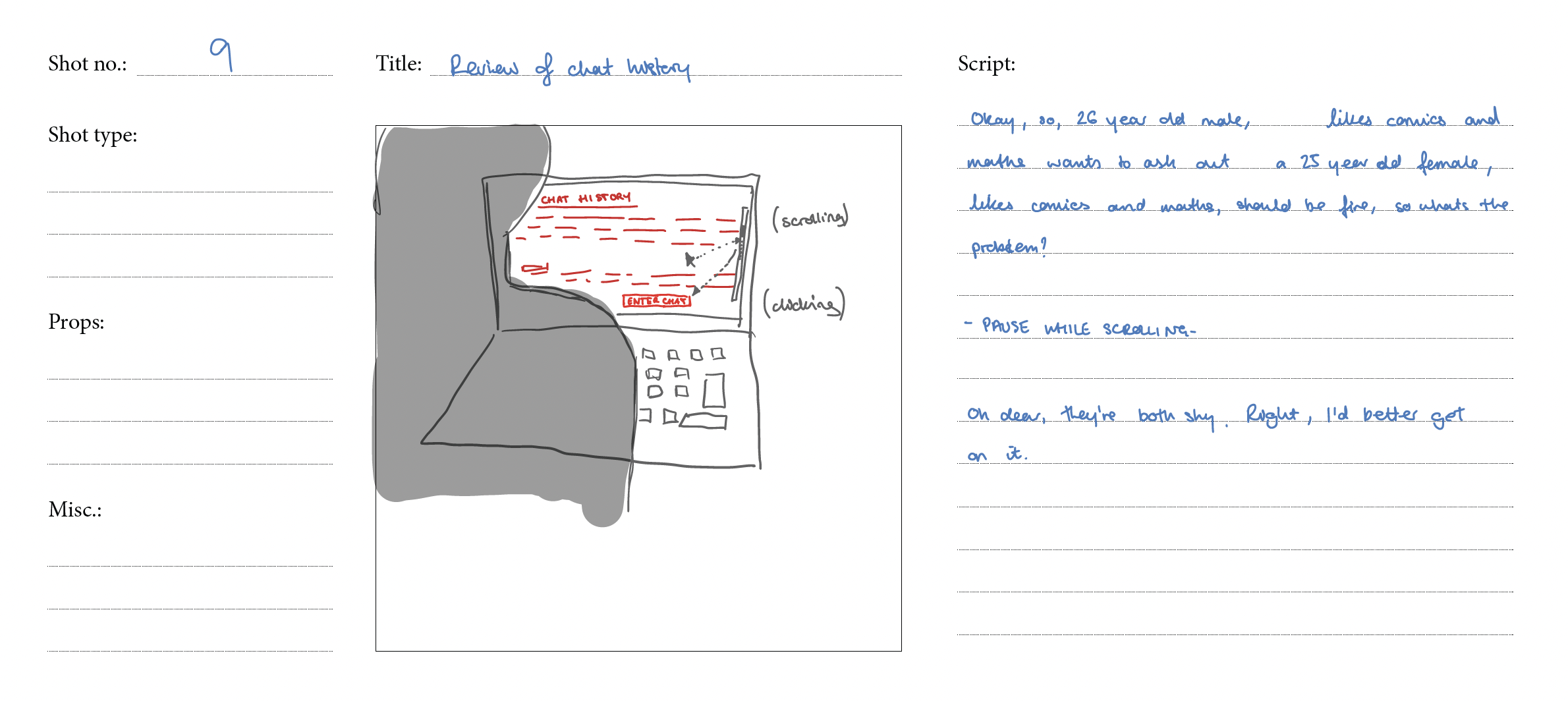

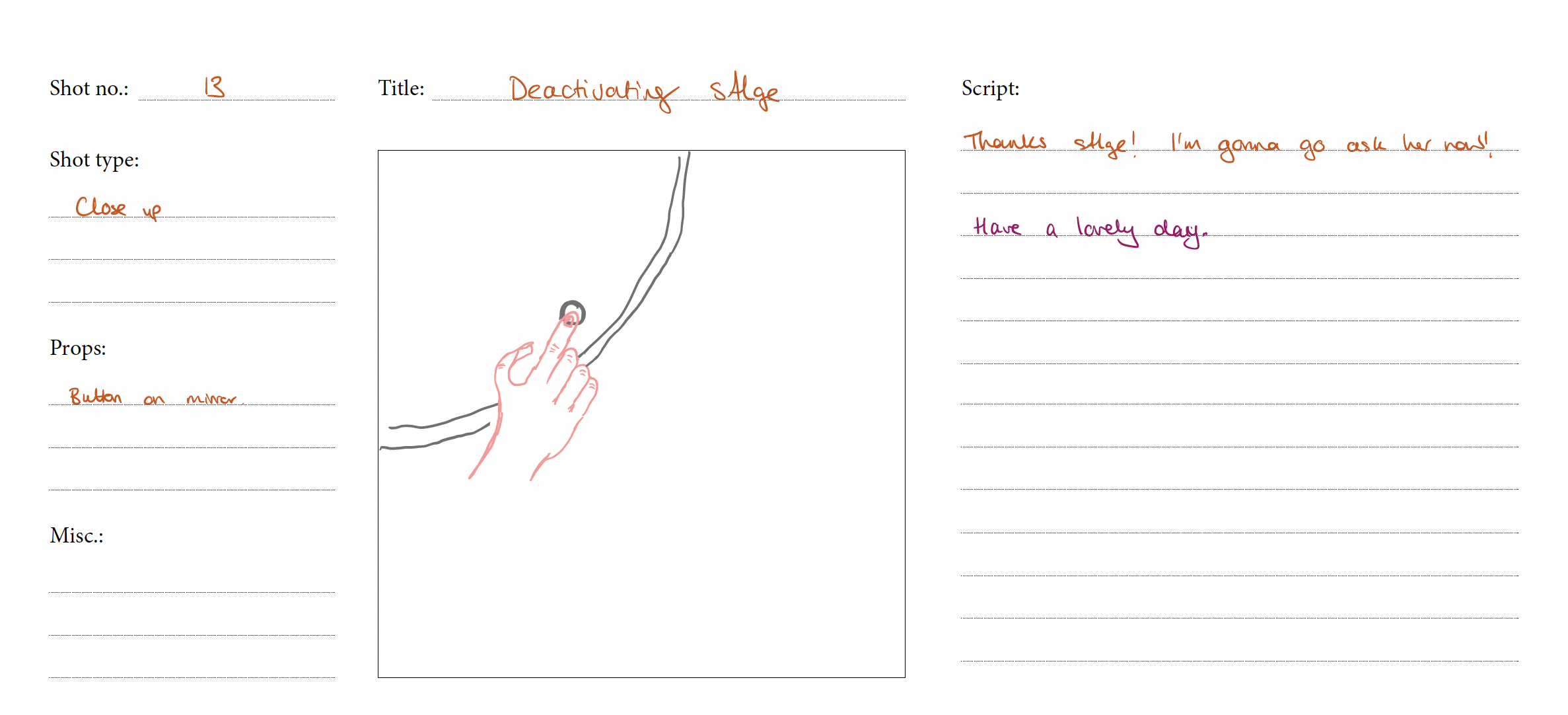

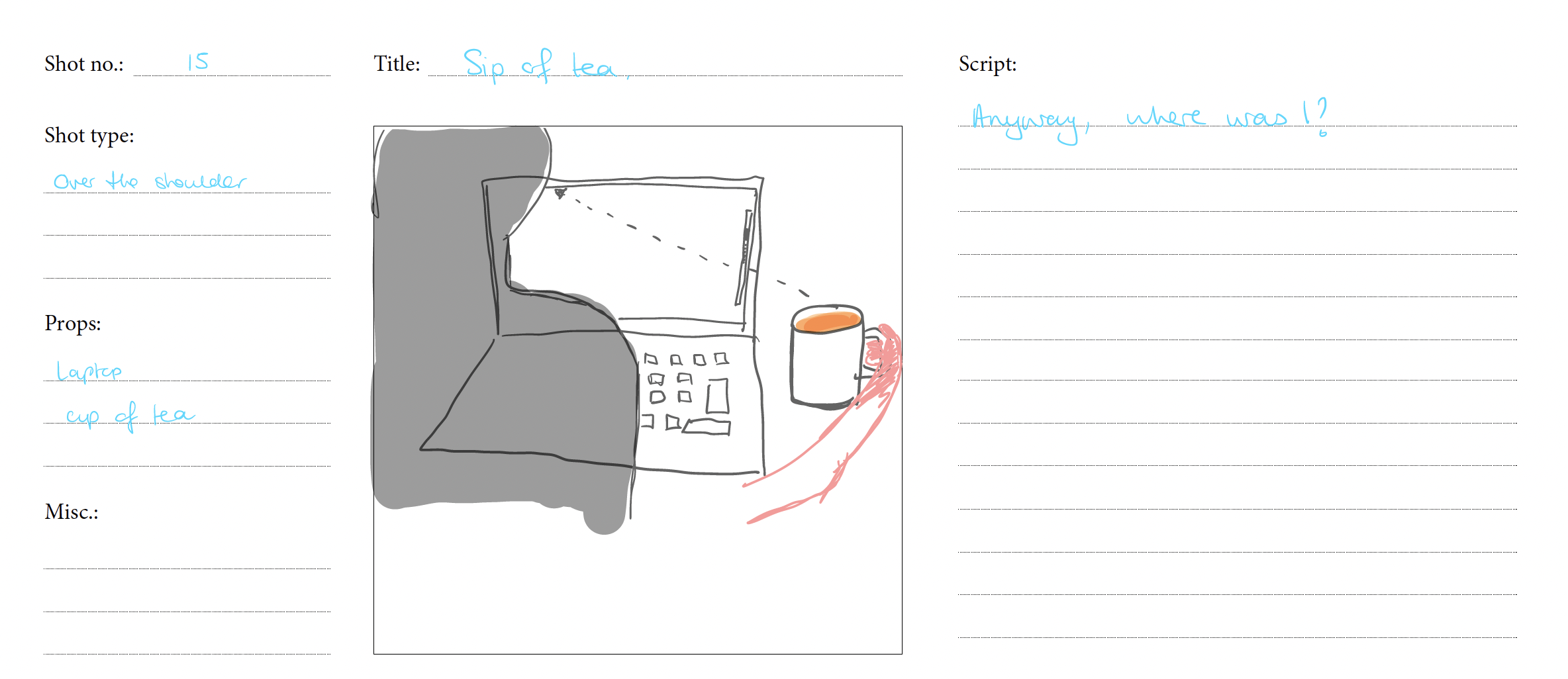

Storyboard:

Presentation and Feedback:

We had the listening activity again in the presentation, and we also talked about the idea and storyboard.

- Interaction is good and very human

- Rizz app, generating interesting conversation for you

- Have to test a lot with people

- Maybe it doesn't need so many levels of complexity

- An app? Try to reduce and simplify

- Fun/playful

- Documentation is good

- The discussion of trust, human and AI

- When would people use the app/help from AI?

Week 6

Based on the presentation feedback and tutorials last week, we thought it was important to simplify everything so that it makes more sense, and also to reduce our workload.

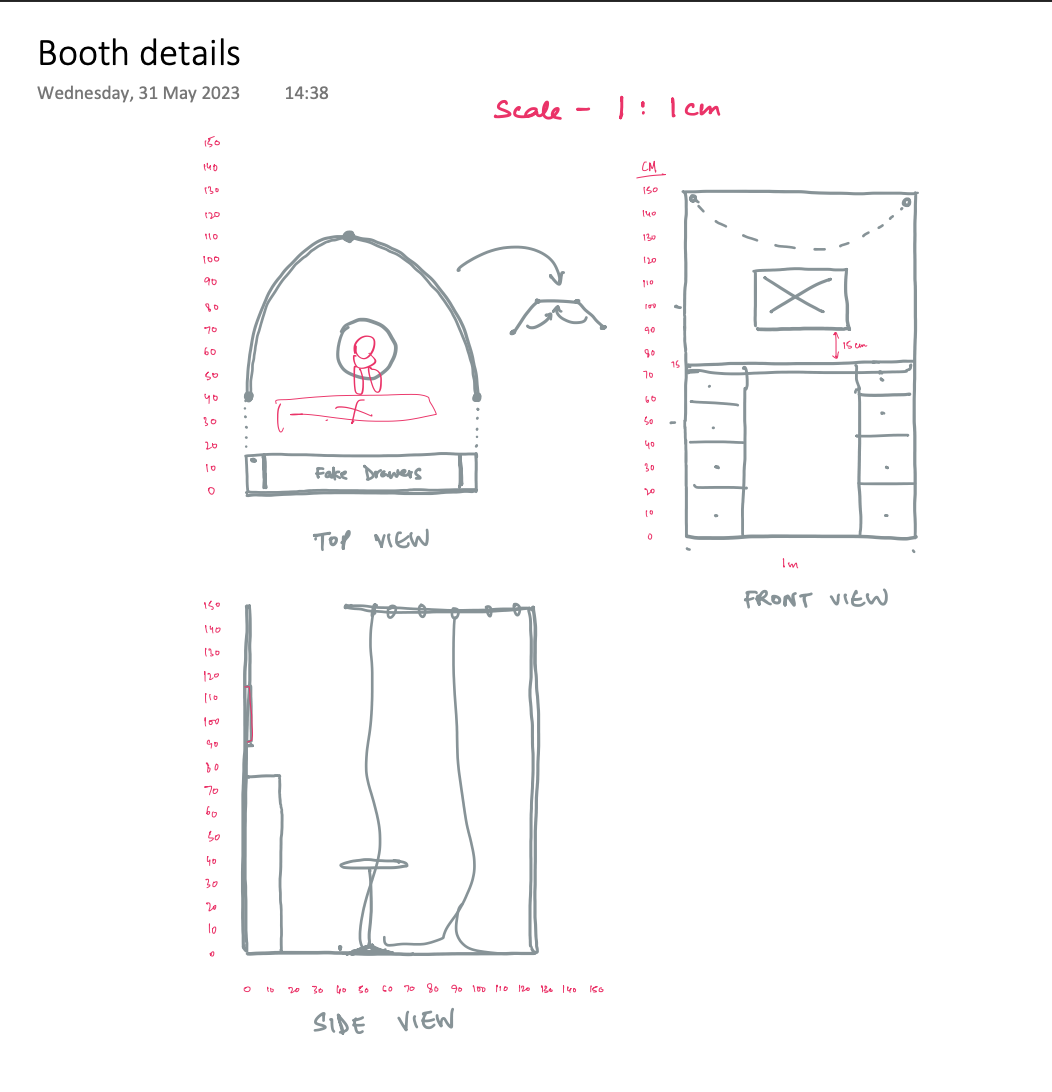

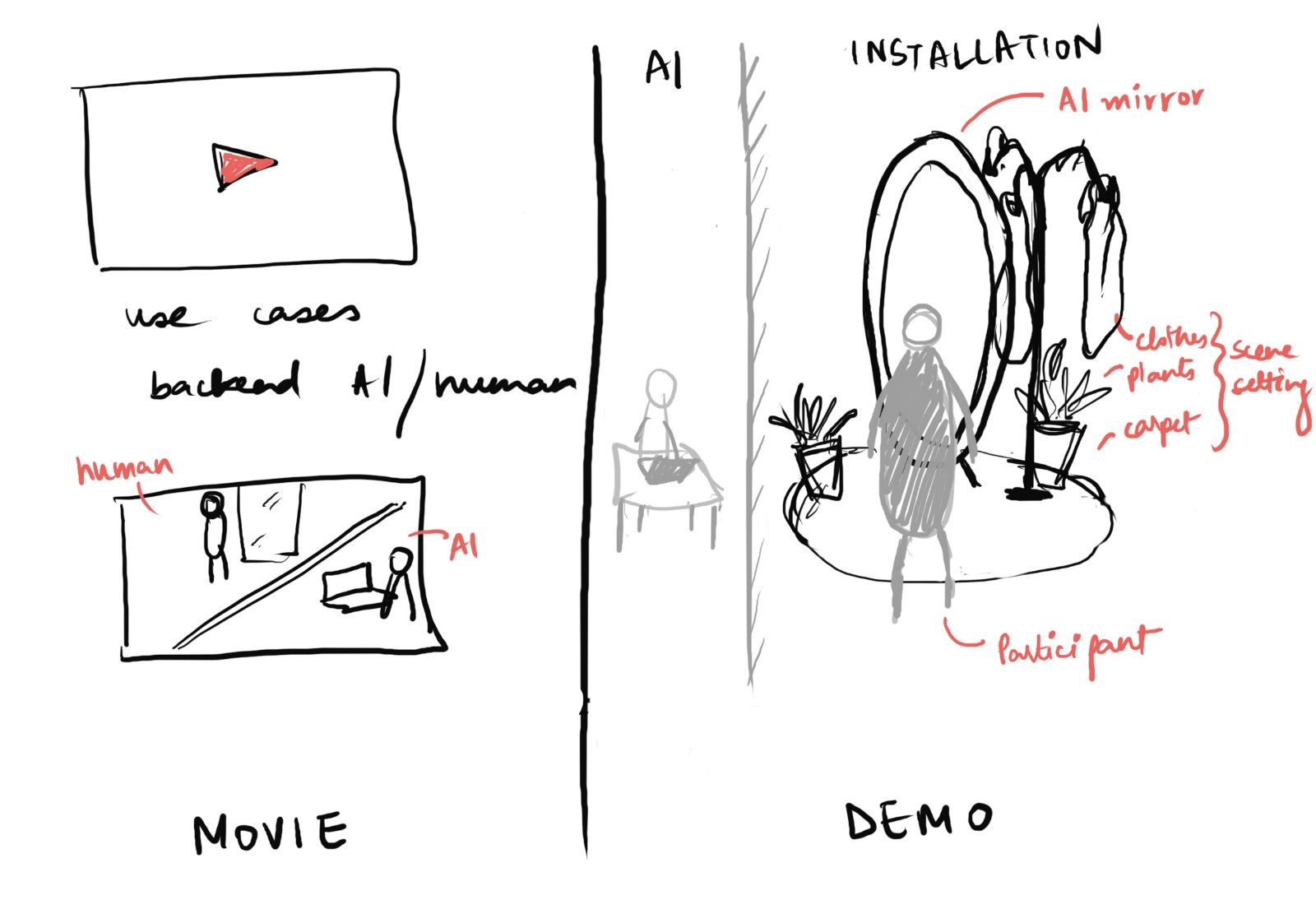

After discussion within our group, we decided not to present it as a product launch but as a real-life situation, since the environment is important. We thought about making a dresser with a mirror, so the scenario is getting ready for your date: dressing up while also asking the AI to run a mock date. To simplify the whole setting, we decided to use just a curtain to separate the user from the AI (a human).

For the mirror, we thought it might be helpful to make a digital mirror screen showing sound waves, level options and other interactions. This can be done by using a two-way see-through mirror sheet with a screen placed behind it to display the interactions. Based on these adjustments, we made a new storyboard.

Storyboard:

In the meantime, we also conducted testing with our course mates.

Testing:

- Could guess when a human was taking over from ChatGPT; it felt more organic and less stressful when it stopped asking such direct questions.

- Not much awareness of the key typing.

- Staging and environment are important.

- No passion in the conversation.

- It would feel more situated with a specific person as the date.

- Did not feel like AI all the way through, maybe because of the key typing sound.

- Answers were longer at the beginning and gradually got shorter.

- Liked that it was very poetic.

- Would not feel comfortable if it's a human behind the AI; would rather date a real person.

- It would make sense to have a mirror to check myself in.

Feedback from another person we tested without pictures:

- A little awkward. Not much room for me to ask and answer questions.

- The voice is very confident; would prefer a more peaceful voice to feel less nervous on a first date.

- The answers are generic.

- Wouldn't realize when a human takes over.

- Would prefer to go on a real date rather than practise with AI.

Presentation and feedback:

- Maybe not a product launch.

- Set it in a real environment; maybe shoot the film in a real house.

- Are the levels necessary?

- Is the mirror screen necessary?

Week 7

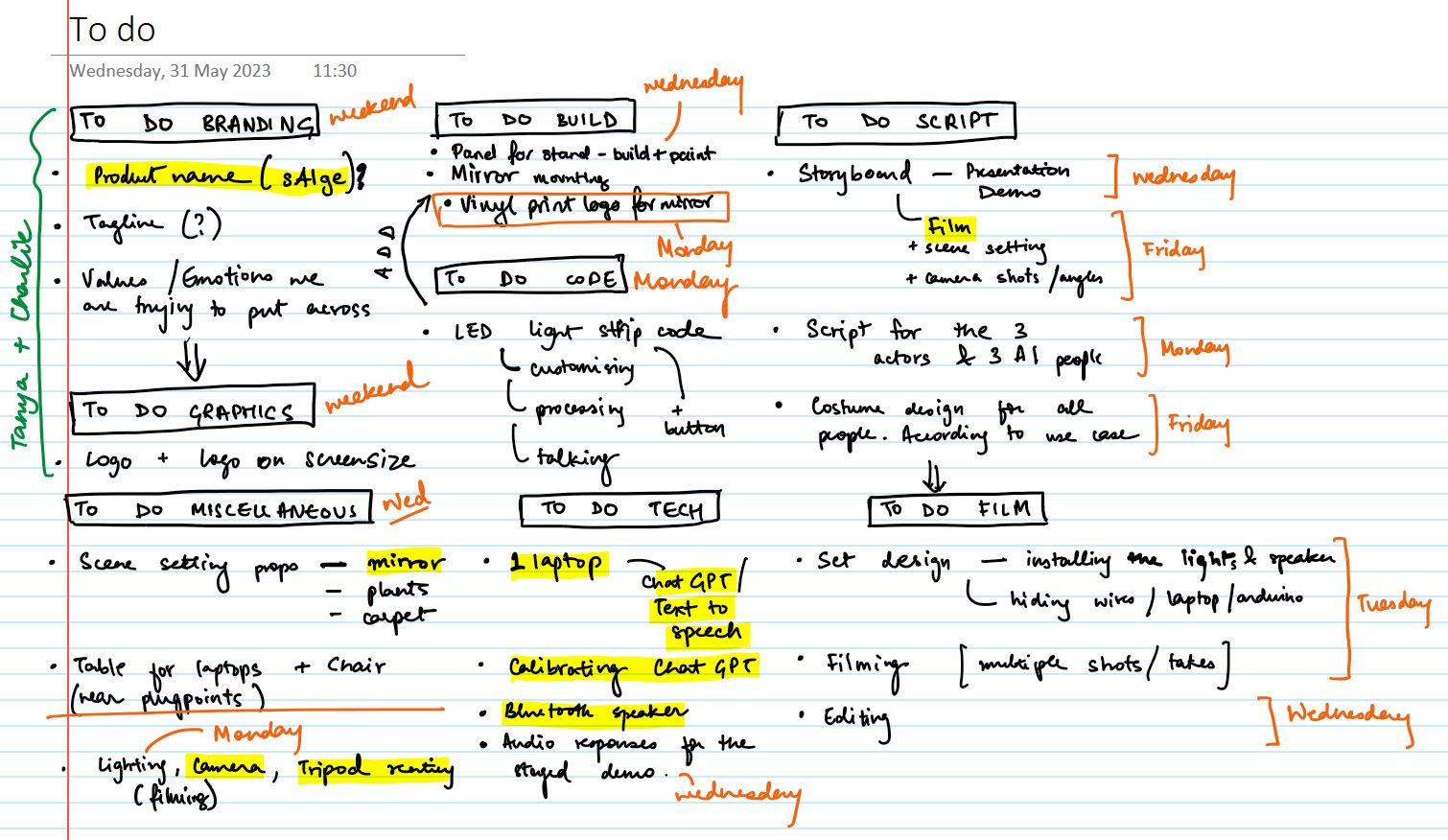

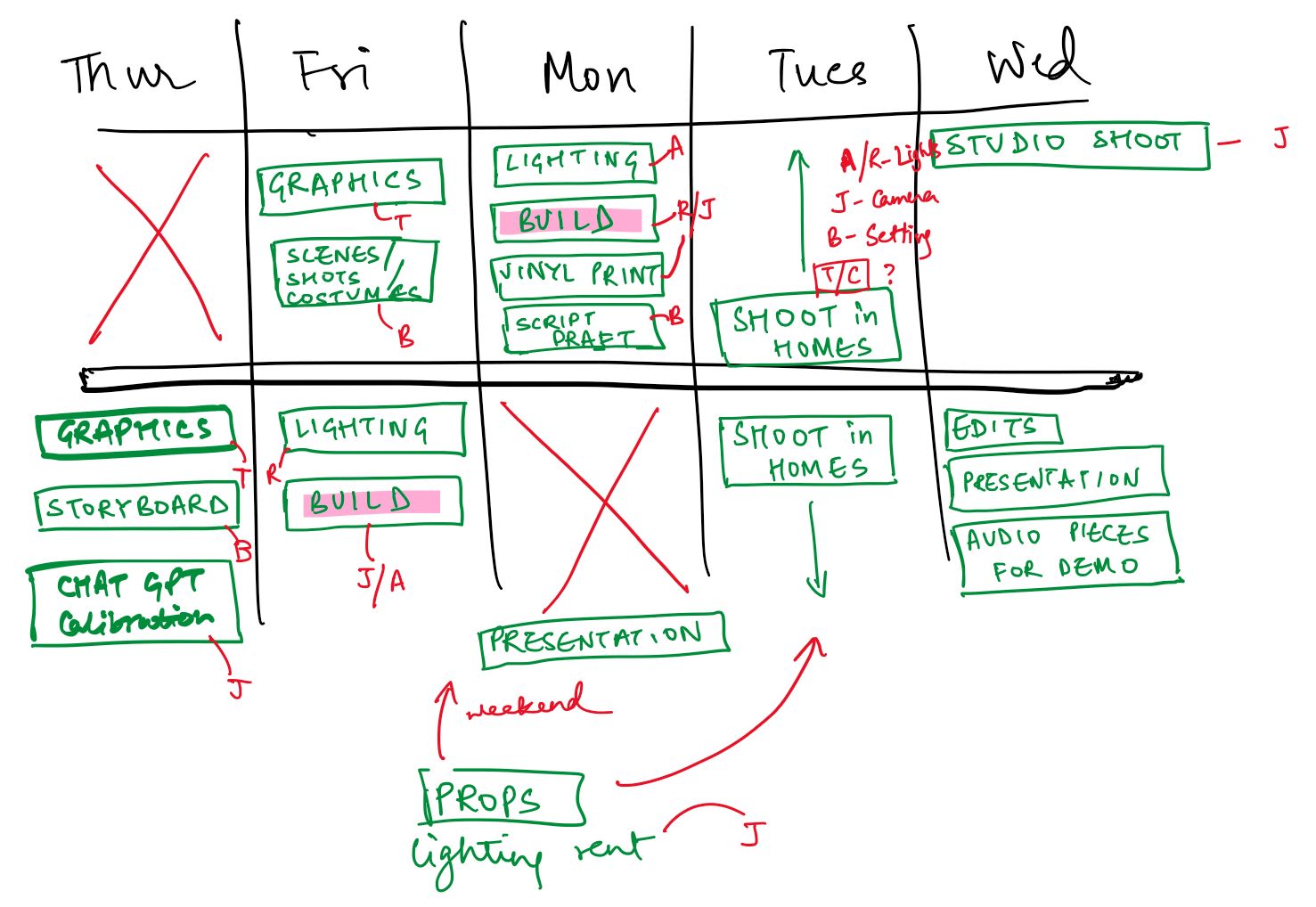

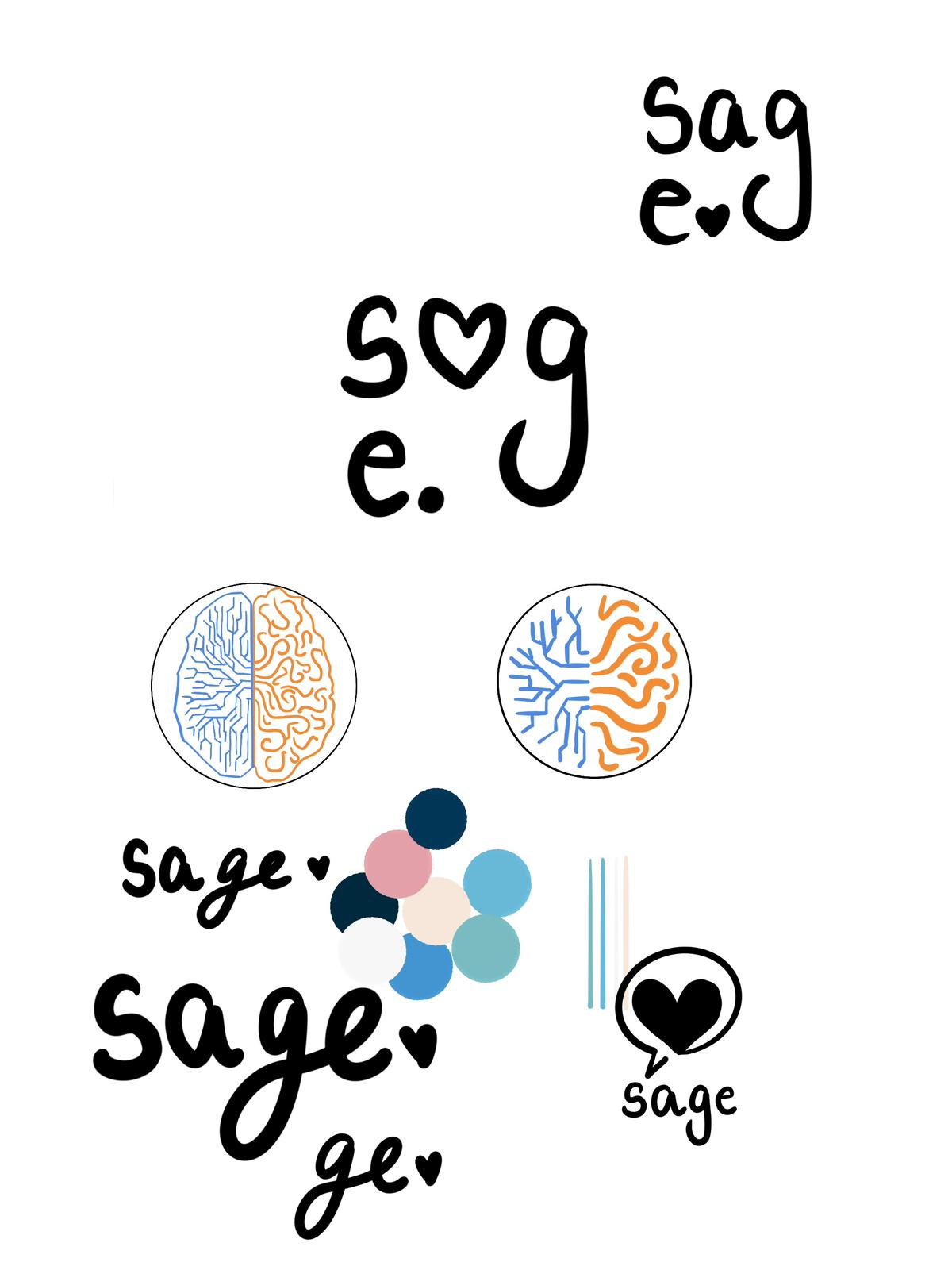

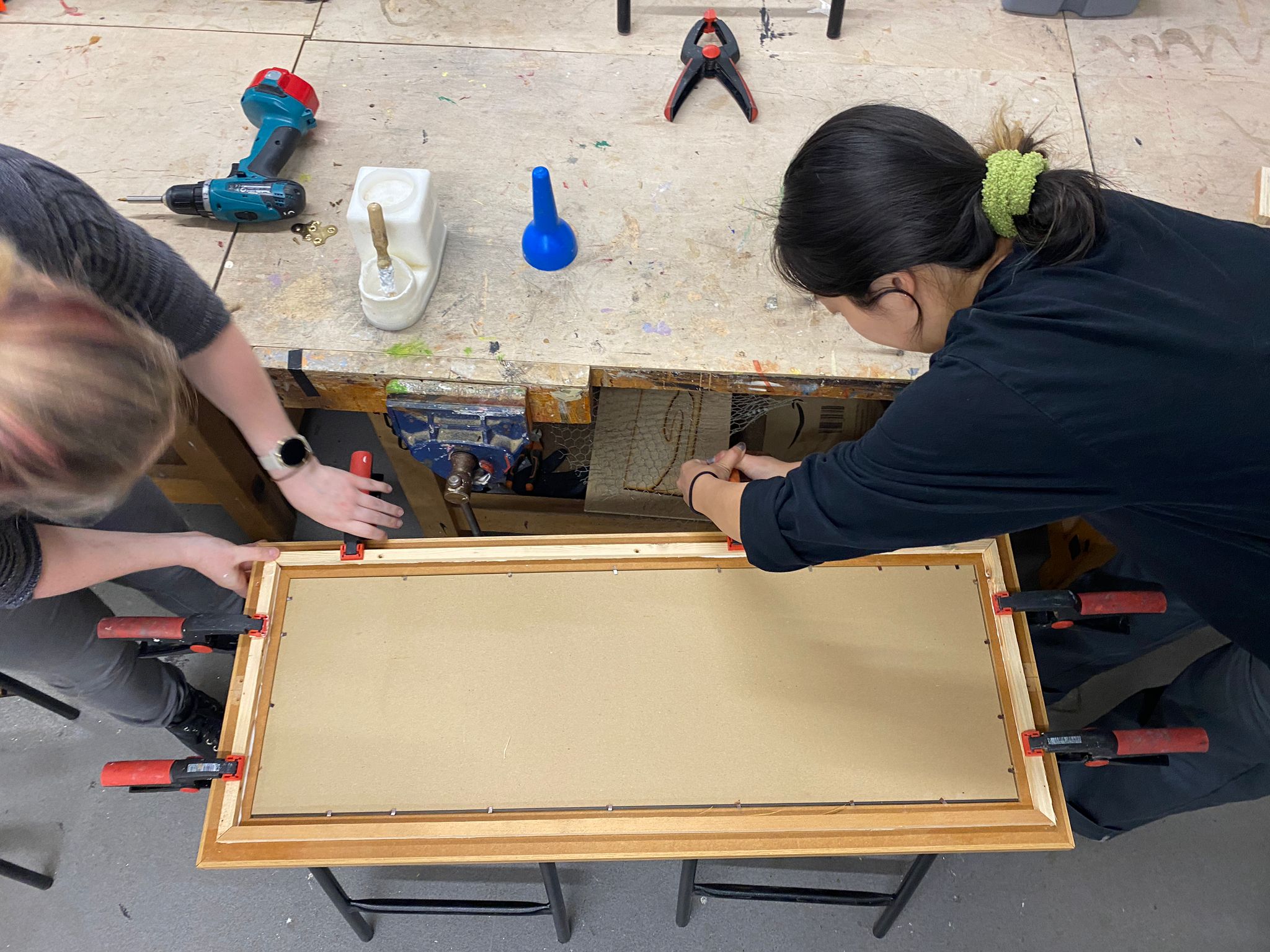

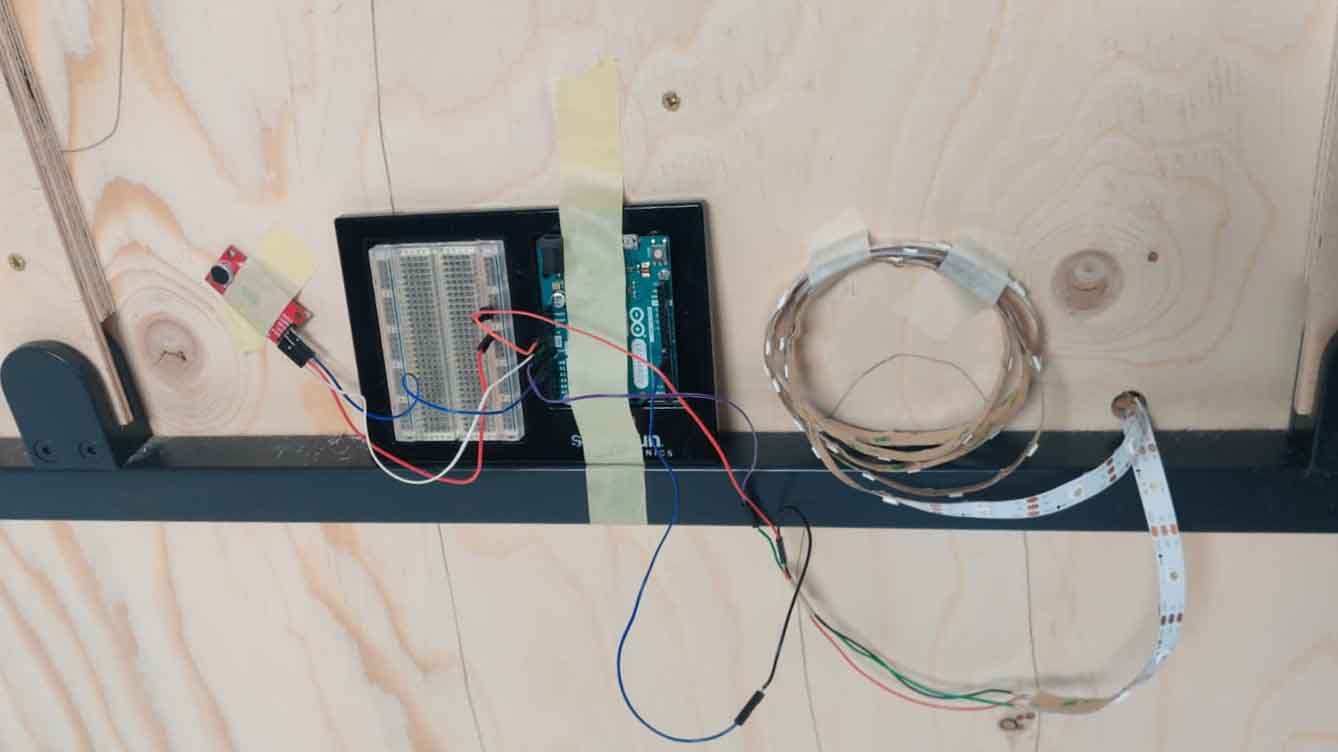

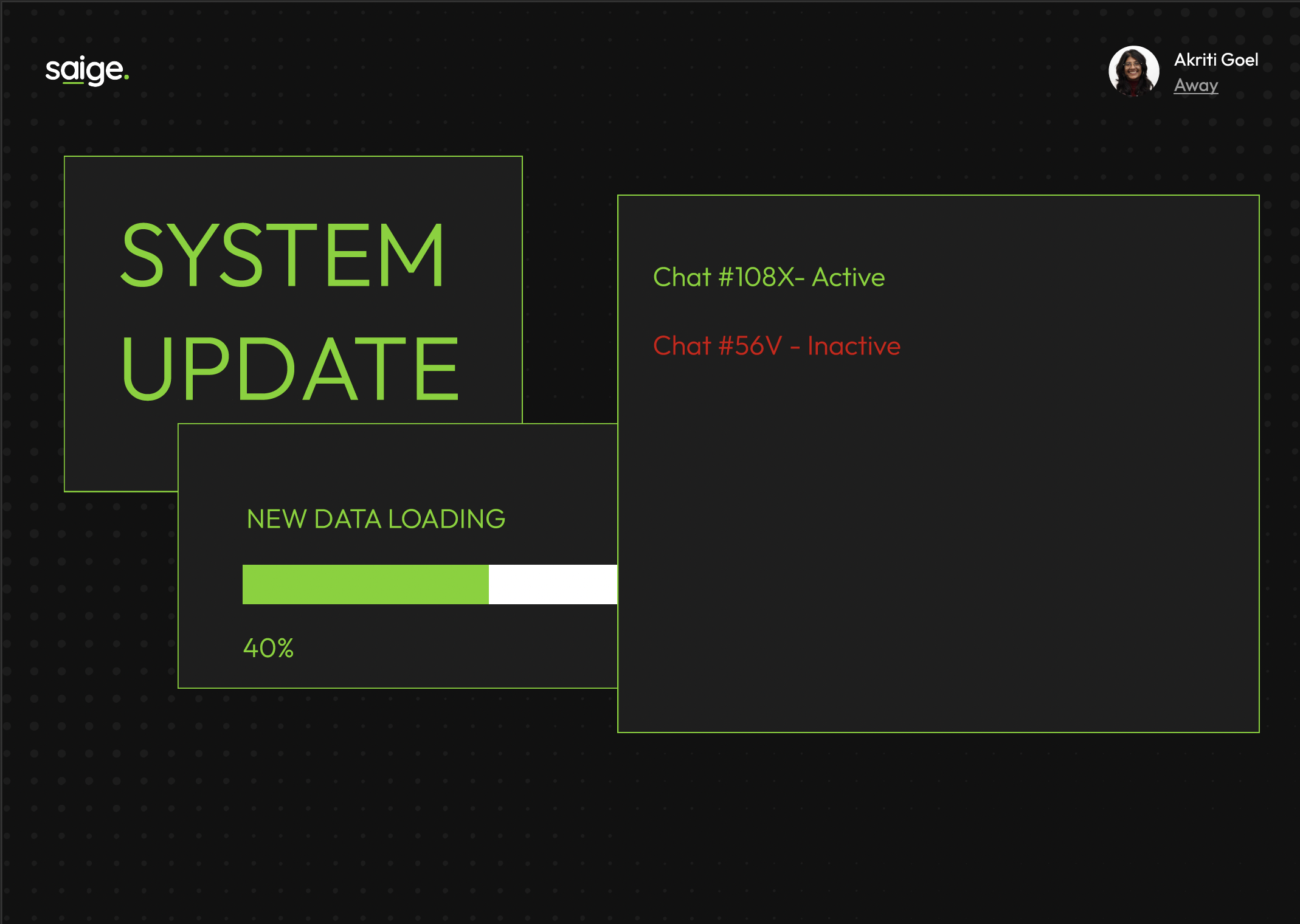

After discussion with the group, we decided to make a short film in a real-life environment and to think about scenarios other than just dating. To simplify the physical build, we decided to drop the mirror screen and keep just a plain mirror, with LED lighting that reacts to the sound of the AI. We also needed to design a logo, print it out and stick it on the mirrors we would film with. To achieve all of this in a short time, we made a plan for the week.

Planning for the week:

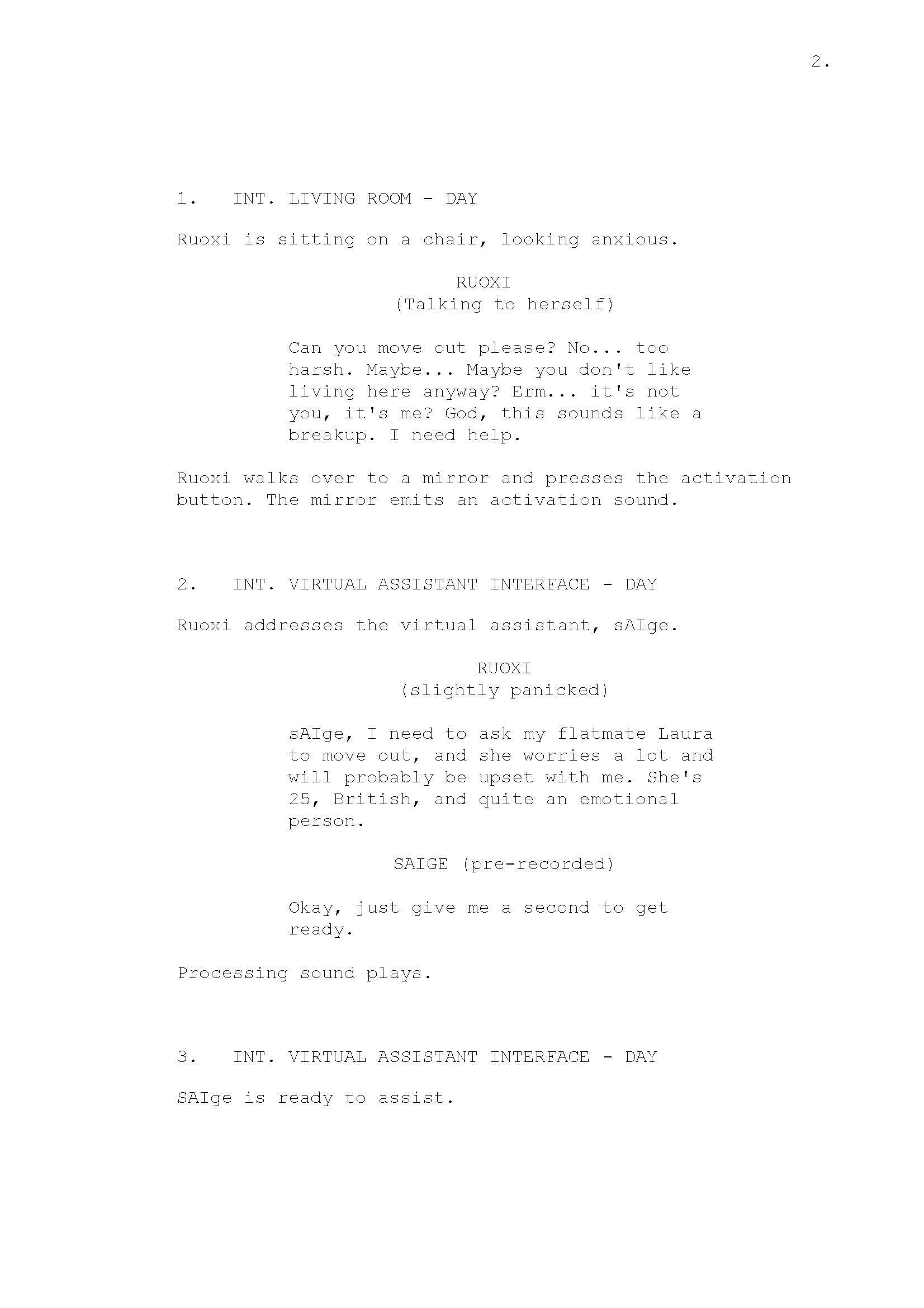

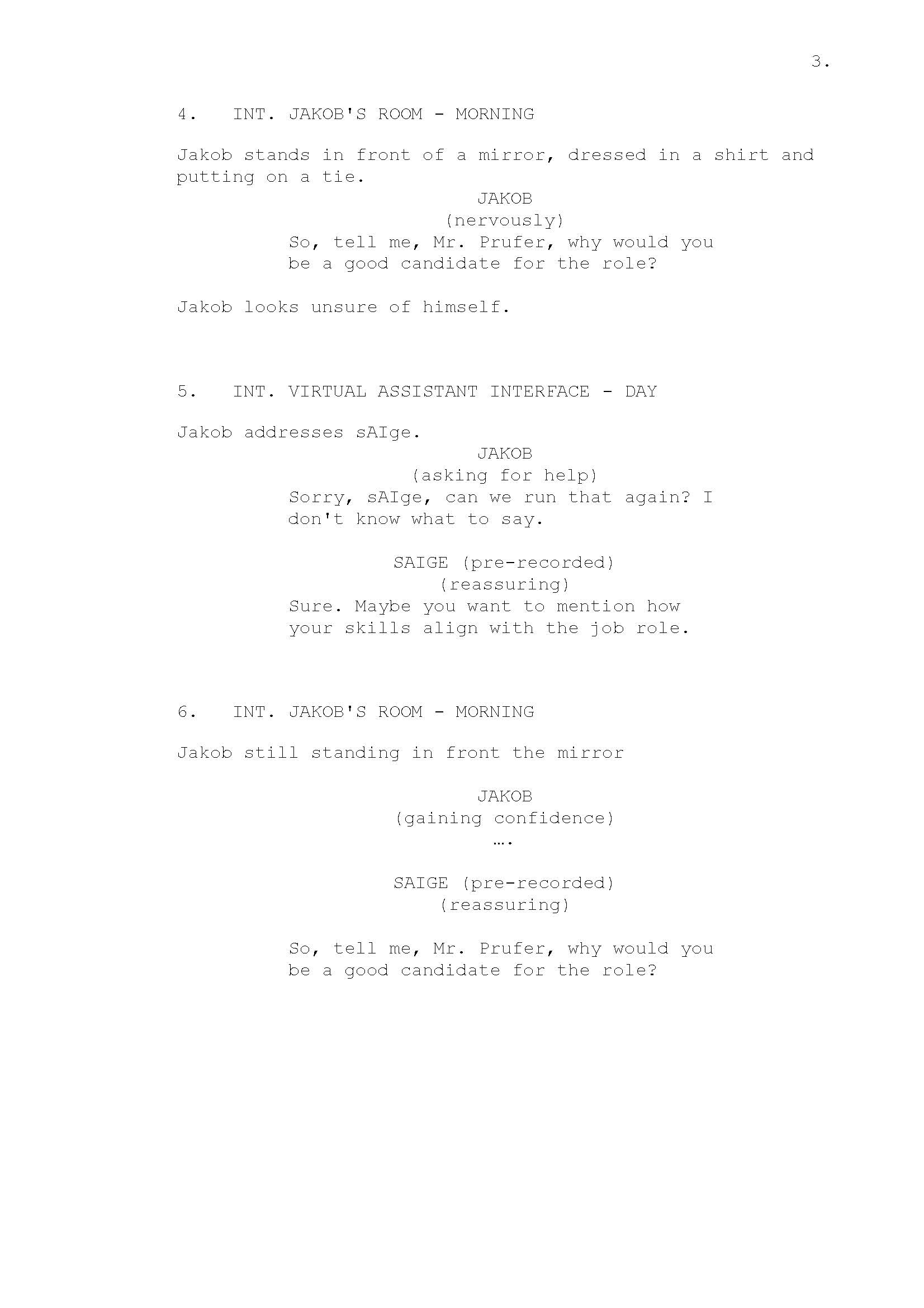

Film storyboard with script:

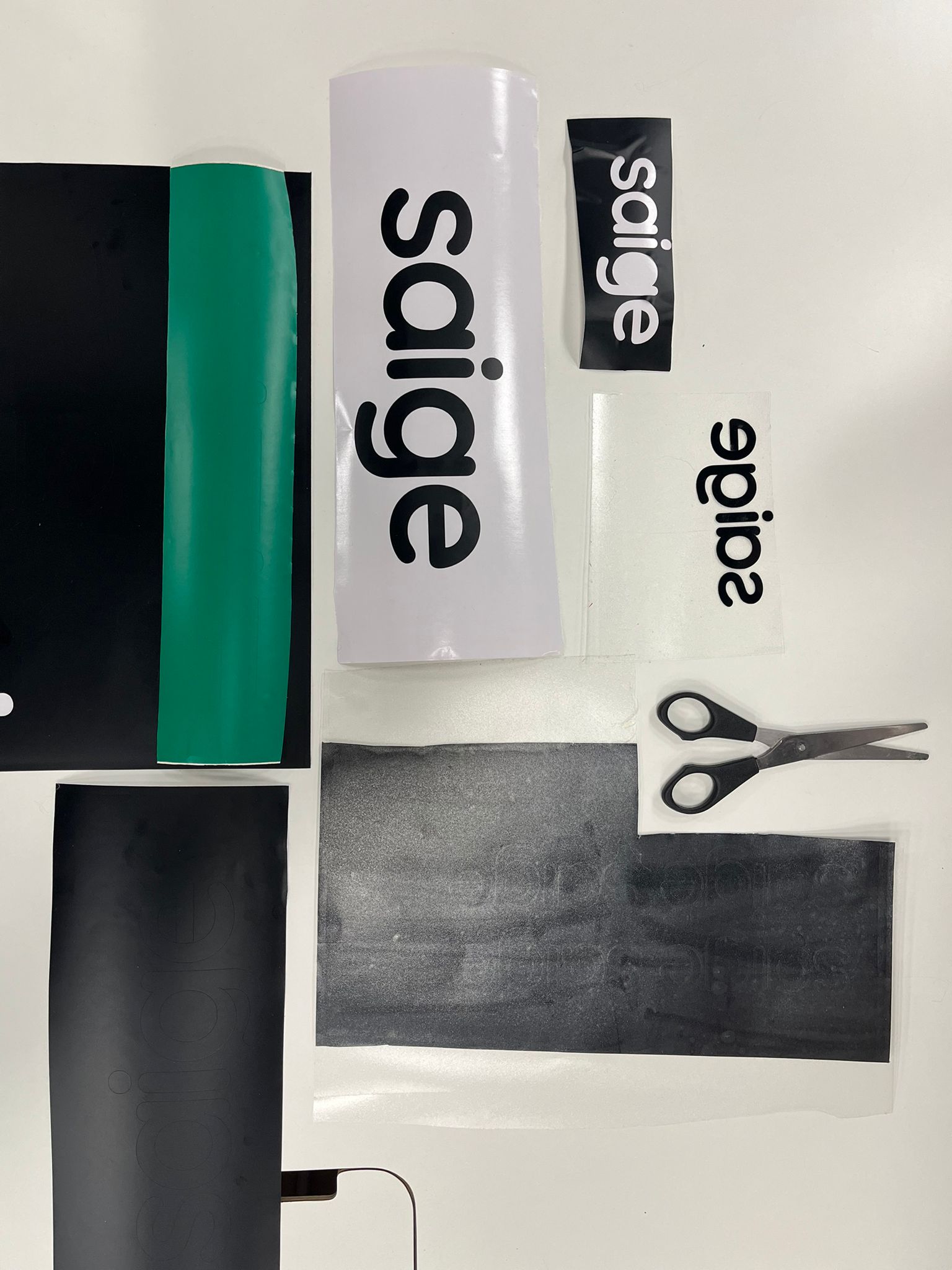

Branding design:

At first we decided on the name “sage” for our logo, but when we found that Sage is an existing company, we changed it to “saige”, since we still loved the name and wanted it to be associated with our AI concept.

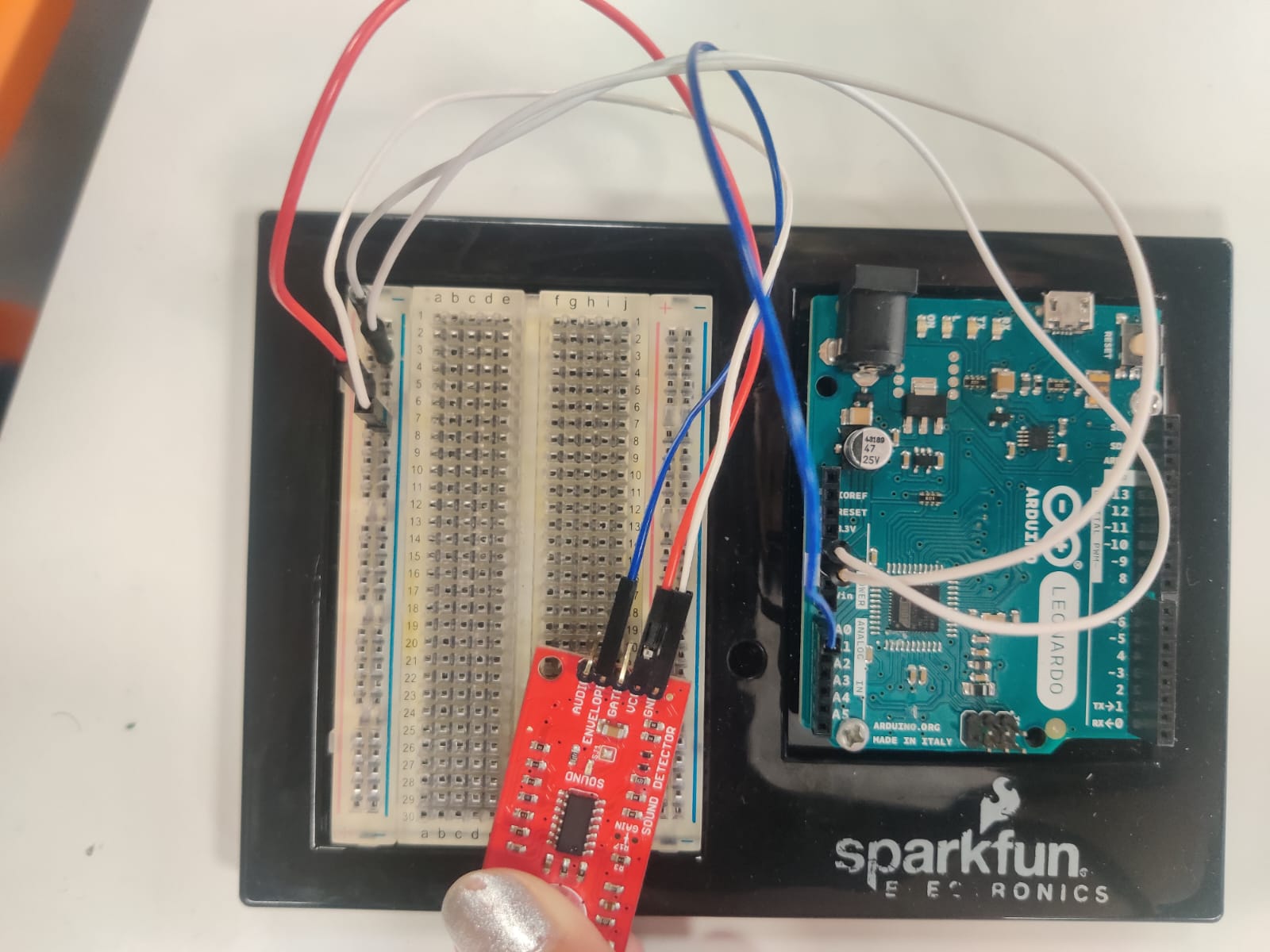

Prototype:

Vinyl print:

Lighting:

Testing:

Sound:

Shooting:

Script:

Studio shoot:

UI design:

Final Presentation:

For the final presentation, we wanted to play the film, which is about four minutes long, and also to showcase a live demo as well as our research process. To fit all of these elements into our seven-minute presentation, we decided to stage the whole presentation as a performance.

Feedback:

- The story is very good.

- Feels like an episode of Black Mirror.

- The presentation is very good, and the scene we set up works very well.

- The branding, logo and everything else are very consistent. It feels complete and very understandable, and the video explains itself very well.

- Could have brought more of our research into the presentation.

- This could be scaled up and pushed even further.

- On the review of the human behind the AI: would expect more of the review part and the human thinking behind it.

- Answers the brief very well.

- A further question to think about: why is the human qualified for the job of being the AI and handling difficult questions?

Personal Reflection:

Our group places a lot of emphasis on human perception, as we believe it is more important to design from a human perspective rather than focusing solely on AI and data. In addition, a valuable lesson I learned from my last project was the importance of gathering information from real people, so I am delighted that I had the opportunity to talk to individuals outside the university. Thanks to this research, we obtained valuable results that inspired us to continue our work. After completing this project, I realized that during the final stages of creating the prototype and presentation in a group setting, everything can change, and we may not be able to follow the initial plan strictly. We often made final changes at the very last minute. For example, we wanted to include the concept of collective consciousness, an important finding from our research, so we planned a very brief three-person debate behind the AI in the film; however, it was somehow lost in the final shoot. In a collective project, prototyping can be chaotic. We had to make decisions about how to adapt our design concept to technological challenges, locations, scheduling and several other aspects. Nevertheless, we are all quite pleased with how it turned out and with the positive response we received.
